The Impact of AI on Music Copyright Enforcement

Deep Learning for Music Recognition

Deep learning models, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have become central to music recognition. These architectures analyze audio features such as pitch, rhythm, and timbre to identify musical pieces with high accuracy. Beyond simple audio matching, deep learning can extract more intricate patterns and relationships within the music, which enables applications well beyond basic identification: classifying musical genres, analyzing the emotional character of a piece, and even identifying the instruments used in a recording. The result is a more nuanced understanding of the audio content than traditional methods provide.
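Pitch is one of the low-level features mentioned above. As a point of reference, the sketch below estimates the fundamental frequency of a mono signal by picking the strongest autocorrelation peak. This is a classical signal-processing method, not a neural network; it only illustrates the kind of periodicity information a recognition system has to capture. It is a minimal pure-Python sketch assuming a clean, single-note signal, and the function name and the 50 to 1000 Hz search range are illustrative assumptions.

```python
import math


def estimate_pitch(samples: list[float], sample_rate: int,
                   fmin: float = 50.0, fmax: float = 1000.0) -> float:
    """Estimate the fundamental frequency (Hz) via the autocorrelation peak.

    Hypothetical helper for illustration; assumes one clean, periodic note.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)  # longest period
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the signal and a copy shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    sr = 8000
    tone = [math.sin(2 * math.pi * 250 * n / sr) for n in range(1024)]
    print(estimate_pitch(tone, sr))
```

For a pure 250 Hz sine sampled at 8 kHz the period is exactly 32 samples, so the peak falls at lag 32 and the estimate is 250 Hz. Real recordings with harmonics, noise, or several simultaneous notes defeat this simple approach, which is part of why learned models are used.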

Because deep learning models learn complex representations from large datasets of musical audio, they make it possible to build music recognition systems that are more robust and adaptable. This capability supports tasks such as automatically tagging music in large libraries, enabling personalized recommendations, and building new kinds of musical analysis tools. Training these models on more, and more varied, data generally improves their accuracy and effectiveness, although the gains depend on the quality of that data.

AI-Powered Music Composition and Generation

Artificial intelligence is not just about recognizing existing music; it can also create new music. AI algorithms can now compose original pieces in various styles, from classical symphonies to modern pop songs. These algorithms learn from existing musical datasets, identify patterns and structures, and then use this knowledge to generate novel compositions. This application of AI opens up new possibilities for musicians and artists, offering a new tool for creativity and inspiration.

The process often involves machine learning models that analyze the structures, harmonies, and melodies of existing compositions. From this analysis the model learns statistical regularities that it can recombine into new material, sometimes producing surprising and genuinely novel pieces. AI also has significant potential as a collaborator for human composers, supplying ideas and variations that musicians can then refine and develop.
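The learn-then-generate loop described above can be illustrated with a far simpler model than those used in practice: a first-order Markov chain over note names. The minimal pure-Python sketch below, built on a hypothetical two-melody corpus, records which note follows which and then samples a new sequence from those transitions. Production systems typically use neural networks and model harmony and rhythm as well; the function names here are illustrative assumptions.

```python
import random
from collections import defaultdict


def train_transitions(melodies: list[list[str]]) -> dict[str, list[str]]:
    """Record every observed note-to-next-note transition in the corpus.

    Duplicates are kept, so frequent transitions are proportionally
    more likely to be sampled during generation.
    """
    transitions: dict[str, list[str]] = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], start: str,
             length: int, seed: int = 0) -> list[str]:
    """Random-walk the transition table to produce a new note sequence."""
    rng = random.Random(seed)  # seeded so results are reproducible
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # no observed successor: stop early
            break
        melody.append(rng.choice(options))
    return melody


if __name__ == "__main__":
    # Hypothetical toy corpus: two short melodies written as note names.
    corpus = [["C", "D", "E", "C"], ["E", "F", "G", "E", "C"]]
    table = train_transitions(corpus)
    print(generate(table, start="C", length=12))
```

Every step in the output was observed somewhere in the corpus, so the result is locally "in style" while the overall sequence can be new. The same property means such a model can also reproduce stretches of its training material closely.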

Music Information Retrieval (MIR) Enhanced by AI

Music Information Retrieval (MIR) systems are being significantly enhanced by AI techniques. Traditional MIR methods often struggle with complex musical structures and nuanced characteristics, and AI can help address these challenges: AI algorithms can identify subtle variations in tempo, dynamics, and instrumentation, providing a more comprehensive and accurate analysis of the musical content.

This improved accuracy matters for tasks such as tagging music with metadata, creating personalized playlists, and enabling advanced musical analysis. By capturing musical nuance at a deeper level, AI can provide more insightful and useful information to users and researchers alike. This is especially valuable in fields like musicology, where detailed analysis of musical features is essential for understanding historical trends and artistic evolution.
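Tempo variation, one of the properties mentioned above, can be quantified once beat positions are known. The minimal sketch below assumes beat onset times (in seconds) have already been detected by some upstream step, which is the harder problem and is not shown. It converts each inter-onset interval into a local tempo in beats per minute and takes the median as a global estimate. This is a simple non-learned baseline, and the function names and onset values are hypothetical.

```python
from statistics import median


def local_tempi(onsets: list[float]) -> list[float]:
    """Tempo in BPM implied by each gap between consecutive beat onsets."""
    return [60.0 / (later - earlier)
            for earlier, later in zip(onsets, onsets[1:])
            if later > earlier]  # skip duplicate or out-of-order onsets


def global_tempo(onsets: list[float]) -> float:
    """Median local tempo, which is robust to a few irregular beats."""
    return median(local_tempi(onsets))


if __name__ == "__main__":
    # Hypothetical onsets: steady 120 BPM, then slowing to about 100 BPM.
    beats = [0.0, 0.5, 1.0, 1.5, 2.1, 2.7]
    print([round(t, 1) for t in local_tempi(beats)])  # [120.0, 120.0, 120.0, 100.0, 100.0]
    print(global_tempo(beats))  # 120.0
```

The per-interval list exposes the slowdown at the end, which a single global tempo would hide; keeping both views is what makes gradual changes such as a ritardando visible.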

Personalized Music Recommendations

AI is transforming how we experience music by personalizing music recommendations. Traditional recommendation systems often rely on basic user preferences, leading to limited and predictable suggestions. Sophisticated AI models, however, can analyze a user's listening history, preferences, and even emotional responses to music, providing more tailored and engaging recommendations.

These AI-powered systems go beyond suggesting similar songs. They can proactively suggest music the user may like based on subtle patterns in their listening habits, their emotional state, or contextual information, such as the mood or atmosphere they are seeking at a particular time. This level of personalization helps create more engaging and meaningful music experiences for individual listeners.
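Learning from listening history can be sketched with user-based collaborative filtering, a classical technique substituted here for the more sophisticated models described above. The minimal sketch below compares users' play-count vectors with cosine similarity and scores tracks the target user has not played by similarity-weighted play counts. User and track names are hypothetical, and the sketch ignores the emotional and contextual signals the text mentions.

```python
import math


def cosine(a: dict[str, int], b: dict[str, int]) -> float:
    """Cosine similarity between two sparse play-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target: str, histories: dict[str, dict[str, int]], k: int = 3) -> list[str]:
    """Rank unheard tracks by play counts of similar users, weighted by similarity."""
    mine = histories[target]
    scores: dict[str, float] = {}
    for user, plays in histories.items():
        if user == target:
            continue
        sim = cosine(mine, plays)
        if sim <= 0:  # no shared listening: this user tells us nothing
            continue
        for track, count in plays.items():
            if track not in mine:
                scores[track] = scores.get(track, 0.0) + sim * count
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]


if __name__ == "__main__":
    # Hypothetical play counts per user.
    histories = {
        "ana": {"track_a": 5, "track_b": 3},
        "ben": {"track_a": 4, "track_b": 2, "track_c": 6},
        "cai": {"track_d": 7},
    }
    print(recommend("ana", histories))  # ['track_c']
```

Here "track_d" is never suggested to "ana" because its only listener shares no history with her. This illustrates a known limitation of pure collaborative filtering that richer models try to address.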

AI for Musical Analysis and Research

Beyond individual user experiences, AI is transforming musical analysis and research. Researchers can now use AI to analyze large collections of musical compositions, identify patterns and trends, and gain deeper insight into musical history and evolution.

AI algorithms can automatically transcribe recordings into musical notation, identify thematic similarities across different pieces, and even analyze the emotional content of music. This automation lets researchers process large amounts of data, uncover hidden relationships, and make discoveries that would be impractical through manual analysis. The result is a more comprehensive understanding of music and its impact on culture and society.
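Thematic similarity is often assessed by comparing melodies in a way that ignores key. One simple, transposition-invariant approach is sketched below. It assumes melodies are already available as MIDI pitch numbers (for example, from transcription), converts each melody into its sequence of pitch intervals, and compares those sequences with edit (Levenshtein) distance. The example melodies and function names are hypothetical, and practical systems also account for rhythm and use more forgiving similarity measures.

```python
def intervals(pitches: list[int]) -> list[int]:
    """Semitone steps between consecutive notes; identical under transposition."""
    return [b - a for a, b in zip(pitches, pitches[1:])]


def edit_distance(a: list[int], b: list[int]) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,              # delete x
                           cur[j - 1] + 1,           # insert y
                           prev[j - 1] + (x != y)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def melodic_distance(m1: list[int], m2: list[int]) -> int:
    """Compare two melodies by the edit distance of their interval sequences."""
    return edit_distance(intervals(m1), intervals(m2))


if __name__ == "__main__":
    theme = [60, 62, 64, 65, 67]          # C D E F G
    transposed = [67, 69, 71, 72, 74]     # same shape, starting on G
    varied = [60, 62, 64, 64, 65, 67]     # one repeated note added
    print(melodic_distance(theme, transposed))  # 0
    print(melodic_distance(theme, varied))      # 1
```

Working on intervals rather than absolute pitches is the design choice that makes a theme and its transposition count as identical.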

The Future of Music Copyright Protection in the AI Era

Challenges Posed by AI Music Generation

The rise of AI-powered music generation tools presents significant challenges to existing copyright frameworks. These tools can create highly realistic and complex musical pieces, often after being trained on large libraries of copyrighted music. Determining authorship and ownership in these cases is a legal and ethical minefield. How should creativity be attributed when a machine learns from existing works and then reinterprets them? These tools also raise concerns about widespread copyright infringement, particularly for artists whose work may be included in training data without their knowledge or permission.

Furthermore, the sheer volume of AI-generated music creates a practical hurdle for copyright enforcement. Traditional methods of monitoring and tracking unauthorized use may not keep pace with this rapidly evolving technology, and copyright holders will face a significant challenge in identifying and addressing AI-generated music that mimics or borrows from their works. The speed and scale of AI music production call for new approaches to protecting creators' rights in this digital landscape.

The blurring lines between human and artificial creativity also raise questions about the very definition of authorship. If an AI can produce a novel musical piece, does that piece belong to the programmer, the AI itself, or the user who prompts the AI? These fundamental questions about ownership and responsibility need to be addressed in order to foster a healthy and sustainable ecosystem for both AI-generated music and traditional musical creations.

Navigating the Legal Landscape

To effectively address the challenges of AI music copyright, a multi-faceted approach is required. International collaborations and standardized legal frameworks are crucial for establishing clear guidelines and consistent protections across different jurisdictions. This includes defining the responsibilities of AI developers, platform owners, and users involved in the creation and dissemination of AI-generated music. These new frameworks must be adaptable and responsive to the rapid advancements in AI technology.

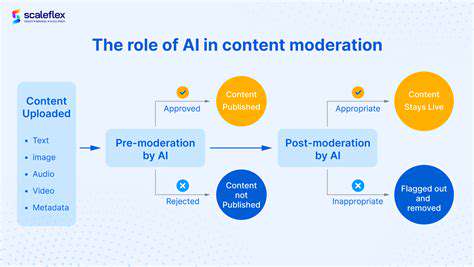

One important area for consideration is the development of technological tools that can identify and attribute AI-generated music. Watermarking is one option: a generator embeds a signal, intended to be imperceptible, in its output so that the audio's origin can later be confirmed. Audio fingerprinting complements this by matching a recording against a database of known works, which can flag outputs that closely copy existing recordings. Robust data-sharing protocols will also be essential to track the origin and use of the music data used to train AI models.
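The watermarking idea can be illustrated with a toy version of spread-spectrum watermarking. A secret key seeds a pseudorandom sequence of +1/-1 values, a scaled copy is added to the audio samples, and detection correlates the audio with the same sequence. This is a minimal pure-Python sketch: the function names, key values, and 0.05 strength are assumptions. It works directly on raw samples, so unlike production schemes it makes no attempt to be inaudible or to survive compression, resampling, or editing.

```python
import math
import random


def pn_sequence(key: int, n: int) -> list[float]:
    """Key-dependent pseudorandom +1/-1 sequence (the hidden watermark pattern)."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(n)]


def embed(samples: list[float], key: int, strength: float = 0.05) -> list[float]:
    """Add a low-amplitude copy of the key's pattern to every sample."""
    pattern = pn_sequence(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]


def detect(samples: list[float], key: int, strength: float = 0.05) -> bool:
    """Correlate with the key's pattern; a marked signal scores about `strength`."""
    pattern = pn_sequence(key, len(samples))
    score = sum(s * p for s, p in zip(samples, pattern)) / len(samples)
    # Unmarked audio, or the wrong key, scores near zero, so split the difference.
    return score > strength / 2


if __name__ == "__main__":
    sr = 8000
    # Hypothetical host signal: two seconds of a 440 Hz tone.
    host = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(2 * sr)]
    marked = embed(host, key=1234)
    print(detect(marked, key=1234), detect(host, key=1234), detect(marked, key=9999))
```

Because the pattern is uncorrelated with the music, the correlation score for marked audio sits close to the embedding strength, while unmarked audio or a wrong key gives a score near zero. Longer signals make that separation more reliable.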

Ultimately, navigating the legal landscape surrounding AI-generated music requires a dynamic and collaborative effort between artists, AI developers, legal experts, and policymakers. The goal is to create a system that recognizes the value of both human and artificial creativity while ensuring fair compensation for creators and protecting the integrity of the music industry in the face of this transformative technology.
