Debunking Myths: The Realities of AI in Creative Industries

Data: The Fuel of AI

Data is the lifeblood of any artificial intelligence system. It's the raw material that algorithms process to learn, identify patterns, and make predictions. Without a substantial and diverse dataset, AI models are essentially empty vessels. Both the quality and the quantity of data directly affect the accuracy and effectiveness of AI applications. Simply accumulating vast amounts of data isn't enough, however; the data must be relevant, reliable, and appropriately formatted for the task at hand.

The sheer volume of data available today is unprecedented. From social media posts to sensor readings, the digital world generates enormous quantities of information every day. However, not all data is created equal. "Garbage in, garbage out" is a very real concern in AI development: a model trained on noisy, duplicated, or mislabeled data will reproduce those flaws. Carefully curated, cleansed, and labeled data is essential for training effective AI models.
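As a rough illustration of what "cleansing" can mean in practice, the following minimal sketch drops incomplete records, trims whitespace, and removes duplicates. The record layout (a `text` field and a `label` field) and the function name `clean_records` are assumptions made for this example, not part of any particular toolkit.

```python
def clean_records(records):
    """Return records with non-empty text and a label, without duplicates.

    Assumes each record is a dict with hypothetical "text" and "label" keys.
    """
    seen = set()
    cleaned = []
    for rec in records:
        text = (rec.get("text") or "").strip()
        label = rec.get("label")
        if not text or label is None:
            continue  # skip records missing text or a label
        key = (text.lower(), label)  # case-insensitive duplicate check
        if key in seen:
            continue  # skip duplicates
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned


# Illustrative, made-up records
raw = [
    {"text": "  A bright landscape ", "label": "painting"},
    {"text": "a bright landscape", "label": "painting"},  # duplicate
    {"text": "", "label": "music"},                        # empty text
    {"text": "Jazz trio recording", "label": None},        # missing label
    {"text": "Jazz trio recording", "label": "music"},
]
cleaned = clean_records(raw)
print(len(raw), "raw records ->", len(cleaned), "cleaned records")
```

Real pipelines usually need more than this (label validation, outlier checks, format normalization), but even simple filters like these remove a good share of the "garbage" before training.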

Algorithms: The Brain of AI

Algorithms are the rules and procedures that AI systems use to process data and generate results. They act as the brain of an AI system, enabling it to learn from data, identify patterns, and make decisions. Different algorithms suit different tasks: a method that works well for forecasting a number may be a poor fit for generating an image.

From simple linear regressions to complex neural networks, the choice of algorithm significantly impacts the performance and capabilities of an AI system. Understanding the strengths and weaknesses of various algorithms is essential for developing effective AI solutions.
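To make the simple end of that spectrum concrete, here is a minimal sketch of a linear regression fitted with ordinary least squares, written in plain Python. The function name `fit_line` and the sample numbers are illustrative assumptions; a real project would more likely use an established library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x, and how x and y vary together
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up points that lie exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")
```

A model like this has only two parameters, which makes it easy to interpret but limits it to straight-line relationships. A neural network trades that interpretability for the ability to capture far more complex patterns.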

The Interplay of Data and Algorithms

The relationship between data and algorithms in AI is symbiotic. Effective algorithms require high-quality, relevant data to function optimally. Similarly, sophisticated algorithms can extract valuable insights from vast and complex datasets that would be impossible for humans to analyze alone.

This interplay is crucial in achieving desired outcomes. Poor data combined with a sophisticated algorithm will still yield suboptimal results, because the algorithm can only learn what the data contains. Conversely, excellent data processed by a rudimentary algorithm can often achieve impressive outcomes on well-defined tasks.

Bias in Data and Algorithms

A significant concern surrounding AI is the potential for bias in both the data and the algorithms themselves. If the training data reflects societal biases, the AI system may perpetuate and even amplify these biases in its predictions and decisions. This can lead to unfair or discriminatory outcomes.

Addressing bias requires careful consideration of data collection methods, algorithm design, and ongoing monitoring and evaluation of AI systems. It's a critical area demanding continuous attention to ensure fairness and ethical application.
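One simple form that ongoing monitoring can take is comparing how often a system produces a positive outcome for different groups. The sketch below computes per-group selection rates and the gap between them (sometimes called a demographic parity difference). The group names, predictions, and function names are hypothetical, and this is only one of several fairness measures, not a complete audit.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


# Made-up example: group labels and the model's yes/no decisions
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = parity_gap(rates)
print(rates, "gap:", gap)
```

A large gap does not prove discrimination on its own, but it is a signal that the data and the model deserve a closer look.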

Ethical Considerations in AI Development

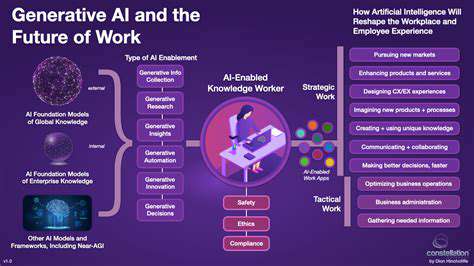

As AI systems become more sophisticated, the ethical implications of their use become increasingly important. Questions about accountability, transparency, and the potential for misuse must be addressed proactively. The development and deployment of AI must be guided by ethical principles to ensure responsible innovation.

It's not just about the technology itself, but also about the societal impact it has. Careful consideration of ethical dilemmas surrounding data privacy, job displacement, and algorithmic decision-making is essential to mitigate potential risks and maximize benefits.

The Future of Data and Algorithms in AI

The field of artificial intelligence is rapidly evolving, with ongoing research pushing the boundaries of what's possible. New data sources and advanced algorithms are constantly emerging, promising greater accuracy, efficiency, and versatility in AI applications. The future of AI will depend heavily on our ability to harness these advancements responsibly and ethically.

As data volumes grow exponentially and algorithms become more complex, the need for robust frameworks and regulations to guide their development and deployment will only increase. This is a dynamic field that demands ongoing attention and adaptability.

Real-World Applications

Data and algorithms are already transforming various industries, from healthcare to finance to transportation. AI-powered diagnostics can improve medical outcomes, while personalized financial recommendations can help manage investments more effectively. Autonomous vehicles are leveraging algorithms and data to navigate complex environments.

The practical applications of AI are constantly expanding, and the future holds even more innovative and impactful uses. Understanding the interplay between data and algorithms is key to harnessing the full potential of AI while mitigating potential risks.

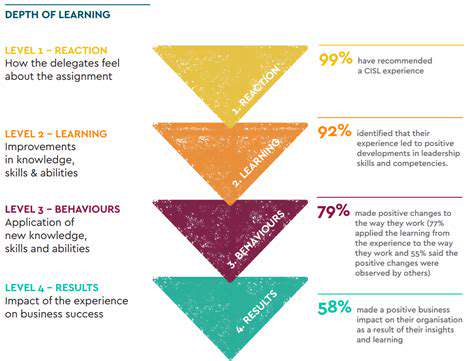

Navigating the Ethics of AI in Creative Work

Defining AI in Creative Work

Artificial intelligence (AI) is rapidly transforming various sectors, including creative work. Understanding AI's role, from basic image generation to complex narrative creation, is crucial for navigating its ethical implications. AI tools are increasingly capable of producing art, music, writing, and other creative outputs, prompting questions about authorship, originality, and the very definition of creativity itself. This evolution necessitates a thoughtful examination of the ethical landscape surrounding AI's use in creative endeavors.

The Authorship Dilemma

One of the central ethical challenges is determining authorship when AI is involved in creative work. If an AI generates a piece of art or a story, who is the creator? Is it the human prompting the AI, the AI itself, or a collaborative effort between the two? Establishing clear lines of responsibility and credit is essential for fostering transparency and ensuring fair attribution in the creative process.

Originality and Creativity

Does AI-generated work truly possess originality? Can it emulate human creativity, or is it simply replicating existing patterns and styles? The question of originality is complex, particularly as AI systems learn from vast datasets of human-created content. This raises concerns about plagiarism and underscores the need for ethical guidelines that distinguish authentic human creativity from output that merely imitates it.

Bias and Representation

AI systems are trained on existing data, which often reflects societal biases. This can lead to AI-generated creative work perpetuating harmful stereotypes or lacking representation of diverse perspectives. Addressing these biases is crucial to ensure that AI tools contribute to a more inclusive and equitable creative landscape. Developers must prioritize data diversity and implement mechanisms to mitigate bias.

Intellectual Property Rights

The application of intellectual property rights to AI-generated works is another critical area of ethical consideration. Who owns the rights to a piece of creative work generated by AI? Is it the programmer, the user who prompts the AI, or the AI itself? Establishing clear intellectual property frameworks is essential to avoid legal disputes and incentivize innovation in the field.

Impact on Human Artists and Creators

The rise of AI in creative fields inevitably raises concerns about the impact on human artists and creators. Will AI displace human talent? How can human artists adapt and thrive in a world that increasingly incorporates AI tools? Exploring potential solutions, such as upskilling initiatives and collaborative approaches, is crucial to ensure a smooth transition and to make sure the benefits of AI reach the people who work in creative fields.

Transparency and Accountability

Transparency in the creative process is paramount when AI is involved. Audiences should be informed about the extent to which AI has participated in the creation of a work. This transparency fosters trust and allows for a more informed assessment of the creative product. Furthermore, establishing accountability mechanisms for AI-generated outputs is vital, particularly regarding issues like plagiarism and bias.
