The Role of Moderation in Maintaining Quality UGC

Relevance and Timeliness

Content decays faster than ever in our 24/7 news cycle. What mattered yesterday might be irrelevant today. The most successful content creators develop a sixth sense for emerging trends while maintaining a backlog of evergreen material. Pro tip: Set up Google Alerts for your niche and monitor social media discussions to stay ahead of the curve.

Originality and Uniqueness

In a sea of recycled content, originality isn't just valuable - it's oxygen for your brand. I once interviewed a chef who said, "If you're not putting your fingerprint on the recipe, you're just copying someone else's homework." The same applies to content creation. Your unique perspective is what makes your work stand out from AI-generated text and competitor copy.

Engagement and Interactivity

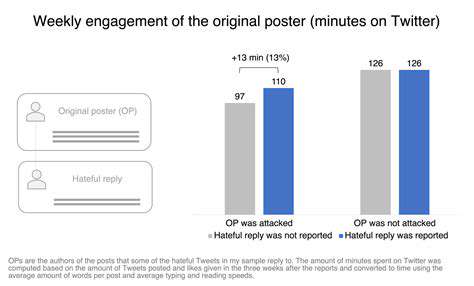

The days of passive content consumption are over. Modern audiences want to participate, not just observe. Consider how you can turn monologues into dialogues - maybe through thought-provoking questions, polls, or challenges that invite response. Remember: Engagement isn't about gimmicks; it's about creating genuine connection points.

Consistency and Style

Your content's voice should be as recognizable as a friend's handwriting. Whether you're crafting a tweet or a whitepaper, maintaining a consistent tone helps build familiarity and trust. Create a style guide that goes beyond grammar rules to capture your brand's personality - are you the knowledgeable professor or the approachable mentor? Define it, then live it in every piece you produce.

The Future of UGC Moderation: Adapting to Evolving Challenges

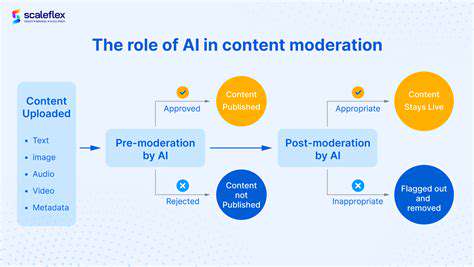

The Rise of AI-Powered Moderation

Moderation at scale requires tools that can keep pace with human creativity - for better and worse. Today's AI systems act like digital bloodhounds, sniffing out problematic content across languages and media formats with steadily improving, though still imperfect, accuracy. What makes this technology revolutionary isn't just its speed, but its ability to learn from context: distinguishing between harmless slang and genuine threats with increasing precision.

The most advanced systems now employ continuous learning, in which moderation decisions, especially human reviewers' verdicts on borderline cases, are fed back as training examples to refine future judgments. This creates a virtuous cycle where the AI becomes more nuanced in its understanding of cultural context and intent. Still, we're far from replacing human judgment entirely - the sweet spot lies in AI handling clear violations while humans tackle edge cases.
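To make that division of labor concrete, here is a minimal Python sketch of confidence-based routing. It assumes some classifier (not shown) already produces a violation score between 0 and 1; the thresholds, class name, and method names are hypothetical. Clear cases are decided automatically, uncertain ones go to a human queue, and each human verdict is saved as a labeled example for the next retraining run, a simplified, batch-style stand-in for continuous learning rather than a full online-learning system.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical thresholds: scores at or above AUTO_REMOVE count as clear
# violations, scores at or below AUTO_APPROVE as clearly acceptable.
# Anything in between is treated as an edge case for a human moderator.
AUTO_REMOVE = 0.95
AUTO_APPROVE = 0.05


@dataclass
class ModerationQueue:
    """Hypothetical hybrid pipeline: the model decides clear cases, humans the rest."""

    human_review: List[str] = field(default_factory=list)
    # (text, is_violation) pairs from human verdicts, saved as future training labels
    training_feedback: List[Tuple[str, bool]] = field(default_factory=list)

    def route(self, text: str, violation_score: float) -> str:
        """Return 'removed', 'approved', or 'escalated' for one post."""
        if violation_score >= AUTO_REMOVE:
            return "removed"
        if violation_score <= AUTO_APPROVE:
            return "approved"
        self.human_review.append(text)
        return "escalated"

    def record_human_decision(self, text: str, is_violation: bool) -> None:
        """Take a post off the review queue and keep the verdict for retraining."""
        if text in self.human_review:
            self.human_review.remove(text)
        self.training_feedback.append((text, is_violation))


if __name__ == "__main__":
    # Scores below are made up for illustration.
    queue = ModerationQueue()
    print(queue.route("buy cheap followers now!!!", 0.99))  # removed
    print(queue.route("that pitch totally bombed", 0.40))   # escalated
    queue.record_human_decision("that pitch totally bombed", False)
    print(queue.training_feedback)  # [('that pitch totally bombed', False)]
```

Where to set the two thresholds is a policy choice as much as a technical one: widening the middle band sends more content to humans, trading throughput for better handling of the ambiguous posts a model is most likely to get wrong.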

Challenges and Considerations

Bias in AI systems often reflects our own blind spots back at us. I once reviewed a moderation system that flagged legitimate discussions about racial inequality as hate speech while missing subtle but harmful microaggressions. Fixing this requires diverse training data and constant vigilance - like teaching a child right from wrong through countless examples and course corrections.
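One practical form of that vigilance is auditing error rates separately for different slices of content, because a single overall accuracy figure can hide exactly the failure described above. The Python sketch below assumes you have a sample of posts that human reviewers have labeled, each tagged with a slice name; the slice names and data are invented for illustration. For each slice it reports the false positive rate (legitimate posts wrongly flagged) and the false negative rate (harmful posts missed).

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Each record: (slice_name, flagged_by_model, labeled_violation_by_humans)
Record = Tuple[str, bool, bool]


def error_rates_by_slice(records: Iterable[Record]) -> Dict[str, Dict[str, Optional[float]]]:
    """Per-slice false positive and false negative rates.

    A rate is None when the slice has no posts of the relevant kind,
    since dividing by zero would be meaningless.
    """
    counts = defaultdict(lambda: {"fp": 0, "benign": 0, "fn": 0, "harmful": 0})
    for slice_name, flagged, violating in records:
        c = counts[slice_name]
        if violating:
            c["harmful"] += 1
            if not flagged:
                c["fn"] += 1  # harmful post the model missed
        else:
            c["benign"] += 1
            if flagged:
                c["fp"] += 1  # legitimate post the model flagged
    return {
        name: {
            "false_positive_rate": c["fp"] / c["benign"] if c["benign"] else None,
            "false_negative_rate": c["fn"] / c["harmful"] if c["harmful"] else None,
        }
        for name, c in counts.items()
    }


# Invented sample: the model over-flags one slice and also misses subtle harm in it.
sample = [
    ("racial-inequality threads", True, False),   # legitimate discussion, flagged
    ("racial-inequality threads", False, False),
    ("racial-inequality threads", False, True),   # subtle microaggression, missed
    ("general threads", False, False),
    ("general threads", True, True),
]
for name, rates in error_rates_by_slice(sample).items():
    print(name, rates)
```

On this invented sample, the racial-inequality slice shows a 50% false positive rate and a 100% false negative rate, while the general slice shows zero for both. A gap like that is the cue to gather more diverse training examples for the weaker slice.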

Transparency reports have become the new trust currency for platforms. Users deserve to know why their content was removed and how to appeal. The most forward-thinking companies now provide moderation receipts - detailed explanations showing exactly which policy was violated and where in the content. This approach turns frustrating experiences into teachable moments.
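A receipt of that kind is essentially a small structured record. The Python sketch below shows one possible shape for it; the field names, the policy ID format, and the appeal URL are hypothetical, not any platform's actual schema. Storing character offsets alongside the excerpt lets a client highlight exactly where in the post the problem was found.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class FlaggedSpan:
    start: int    # character offset where the offending text begins
    end: int      # character offset just past the offending text
    excerpt: str  # the offending text itself, for display to the user


@dataclass
class ModerationReceipt:
    content_id: str
    action: str          # e.g. "removed" or "hidden"
    policy_id: str       # identifier of the violated rule (hypothetical format)
    policy_summary: str  # plain-language explanation shown to the user
    spans: List[FlaggedSpan]
    appeal_url: str      # where the user can contest the decision


def make_receipt(content_id: str, text: str, action: str, policy_id: str,
                 policy_summary: str, offsets: List[Tuple[int, int]],
                 appeal_url: str) -> ModerationReceipt:
    """Build a receipt, copying each flagged span's text out of the original post."""
    spans = [FlaggedSpan(start, end, text[start:end]) for start, end in offsets]
    return ModerationReceipt(content_id, action, policy_id, policy_summary, spans, appeal_url)


post = "Great match last night! Buy cheap followers at spam.example today."
offending = "Buy cheap followers at spam.example"
start = post.index(offending)

receipt = make_receipt(
    content_id="post-123",
    text=post,
    action="removed",
    policy_id="spam-3.1",
    policy_summary="Posts may not advertise paid follower or engagement services.",
    offsets=[(start, start + len(offending))],
    appeal_url="https://example.com/appeals/post-123",
)
print(json.dumps(asdict(receipt), indent=2))
```

Serializing to JSON keeps the receipt easy to display in an app, attach to a notification email, or aggregate later into platform-wide transparency statistics.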

The free speech debate around AI moderation often misses a crucial point: online platforms are private spaces, not public squares. Still, with great power comes great responsibility. Striking the right balance requires input from linguists, ethicists, and community representatives - not just engineers and lawyers. Some platforms are now creating citizen review boards to oversee contentious moderation decisions, blending AI efficiency with human wisdom.

Looking ahead, the most successful moderation strategies will combine AI's scalability with human empathy. Imagine systems that don't just remove harmful content but actively promote constructive dialogue - nudging users toward better behavior rather than just punishing bad actions. This positive approach could transform online spaces from battlegrounds into communities.