The Ethics of AI in Algorithmic Discrimination

Biased Data: The Seeds of Algorithmic Inequality

At the core of every artificial intelligence system lies the data used to train it. This data, typically gathered from real-world sources, often mirrors existing societal prejudices. When machine learning models are trained on skewed data, they absorb those biases and can even amplify them. Consider facial recognition systems trained predominantly on images of fair-skinned people: they tend to have higher error rates when identifying darker-skinned individuals, producing false matches with unjust consequences. The same kind of data bias affects many other AI applications, including credit decisions, employment screening, and judicial processes, where it reinforces existing disparities.
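
One common way to surface this kind of data bias is to measure a model's error rate separately for each demographic group instead of reporting a single overall accuracy. The Python sketch below assumes you already have ground-truth labels, model predictions, and a group label for every example. The function name `error_rate_by_group` and the toy data are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return the fraction of incorrect predictions for each group.

    y_true, y_pred and groups are parallel sequences: the true label,
    the model's prediction and the demographic group of each example.
    """
    totals = defaultdict(int)  # examples seen per group
    errors = defaultdict(int)  # wrong predictions per group
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy data: 1 = "same person", so every pair should match.
y_true = [1, 1, 1, 1, 1, 1, 1, 1]
y_pred = [1, 1, 1, 1, 1, 0, 0, 1]
groups = ["lighter", "lighter", "lighter", "lighter",
          "darker", "darker", "darker", "darker"]

print(error_rate_by_group(y_true, y_pred, groups))
# {'lighter': 0.0, 'darker': 0.5}
```

In this toy example the overall accuracy is 75%, a figure that hides the fact that every error falls on a single group.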

The methods used to collect and prepare the data can introduce further distortions. Collection that oversamples particular population segments, or that relies on flawed sampling methods, yields a picture of the population that does not match reality. These distortions propagate downstream, making the resulting algorithms biased and unreliable. Addressing the problem requires careful scrutiny of data collection practices and a deliberate effort to train AI systems on diverse, representative datasets.
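
A simple first check for unrepresentative sampling is to compare each group's share of the dataset with its share of a reference population. The sketch below assumes such reference shares are available (for example from census figures); the function name `representation_gap` and all numbers are hypothetical.

```python
from collections import Counter


def representation_gap(sample_groups, population_shares):
    """Compare each group's share of a dataset with its share of a reference population.

    Positive values mean the group is over-represented in the sample,
    negative values mean it is under-represented.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    # Include groups that appear only in the sample or only in the population.
    all_groups = set(counts) | set(population_shares)
    return {
        group: round(counts[group] / n - population_shares.get(group, 0.0), 4)
        for group in sorted(all_groups)
    }


# Hypothetical example: a dataset drawn mostly from urban areas.
sample = ["urban"] * 80 + ["rural"] * 20
population = {"urban": 0.6, "rural": 0.4}
print(representation_gap(sample, population))
# {'rural': -0.2, 'urban': 0.2}
```

A gap like this does not prove a trained model will be biased, but it flags where the data fails to reflect the population the system will serve.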

Algorithmic Design: Implicit and Explicit Biases in Code

Even with unbiased data, the design of an algorithm can embed prejudice. Such bias can arise implicitly, through the assumptions and design choices of its developers, or explicitly, when particular parameters are given disproportionate weight. For example, a credit-scoring algorithm might unintentionally favor applicants with long credit histories, disadvantaging underserved populations with limited access to banking. Design flaws like these can perpetuate existing economic inequalities.
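
To make the credit example concrete, here is a deliberately simplified, hypothetical scoring rule in which part of the score depends on how long an applicant's credit record is. The function `toy_credit_score`, its 10-year cap, and its 0.6/0.4 weights are invented for illustration and do not describe any real scoring model.

```python
def toy_credit_score(on_time_rate: float, years_of_history: float) -> float:
    """Hypothetical score in [0, 1]; weights are illustrative only.

    on_time_rate: fraction of past payments made on time (0 to 1).
    years_of_history: length of the applicant's credit record in years.
    """
    # History length is capped at 10 years and scaled to [0, 1].
    history_factor = min(years_of_history / 10.0, 1.0)
    # 40% of the score depends on how long the record is, not on behavior.
    return 0.6 * on_time_rate + 0.4 * history_factor


# Two applicants who have both paid every bill on time.
established = toy_credit_score(on_time_rate=1.0, years_of_history=12)
newcomer = toy_credit_score(on_time_rate=1.0, years_of_history=1)
print(f"established: {established:.2f}, newcomer: {newcomer:.2f}")
# established: 1.00, newcomer: 0.64
```

Both applicants have identical repayment behavior, yet the newcomer scores much lower purely because of a thin credit file, which is exactly the kind of implicit design bias described above.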

The opacity of many algorithmic decision processes is another critical concern. Many advanced models, particularly deep learning models, function as black boxes whose reasoning is difficult to inspect. This lack of interpretability makes it hard to detect and correct embedded biases. Building systems that balance accuracy with interpretability is therefore vital for making AI applications reliable and fair.

To prevent AI systems from reinforcing or worsening social inequities, developers need comprehensive ethical standards and rigorous testing protocols that measure and reduce bias in both training data and algorithms. This requires ongoing collaboration among academic researchers, software engineers, government regulators, and community stakeholders to ensure that AI is used responsibly and ethically.
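
As one example of what a testing protocol might measure, the sketch below computes each group's selection rate and the disparate impact ratio (lowest rate divided by highest). It flags ratios below 0.8, the "four-fifths" rule of thumb from US employment selection guidelines; that threshold is a screening heuristic, not a legal determination. The function names and screening data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += int(decision)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group: no disparity to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: 1 = advanced to interview.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(decisions, groups)
print(f"selection rates: {selection_rates(decisions, groups)}")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("below the four-fifths threshold: investigate further")
```

A single metric like this cannot establish that a system is fair, which is why such checks are only one part of the broader collaboration the paragraph above calls for.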

Ultimately, tackling AI bias requires a strategy that addresses both data quality and algorithm design. This involves promoting diverse, representative datasets; developing transparent, interpretable algorithms; and establishing ethical guidelines for how AI is built and deployed.

Moving Forward: Ethical Frameworks and Responsible AI Practices

Defining Ethical Frameworks for AI

Ethical frameworks offer structured ways to address the complex moral questions raised by AI development. Often drawing on philosophical traditions such as utilitarianism, deontological ethics, and virtue ethics, they provide practical guidance for developers, researchers, and users as they build, deploy, and interact with AI technologies. Well-defined ethical standards help ensure that AI development prioritizes human welfare and neither reinforces existing prejudices nor creates new forms of discrimination.

Bias Mitigation in AI Algorithms

Because machine learning systems learn from historical data, they can absorb whatever prejudices that data contains. Recognizing and counteracting algorithmic bias is therefore a vital part of ethical AI development. This requires careful data curation and preprocessing, together with ongoing monitoring to detect and correct biases that emerge once a system is in operation. Development teams with diverse backgrounds and perspectives are also better placed to recognize and remove these embedded prejudices.
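
One widely used preprocessing technique is reweighing: each training example receives the weight P(g) · P(y) / P(g, y), estimated from counts, so that in the weighted data the label y no longer depends on group g. The pure-Python sketch below assumes every group-label combination occurs at least once; the function names and hiring data are hypothetical. The resulting weights would then be passed to a learner that supports per-sample weights (many scikit-learn estimators accept a `sample_weight` argument to `fit`).

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make group membership and label independent.

    Each example with group g and label y gets weight P(g) * P(y) / P(g, y),
    estimated from counts. Under these weights every group has the same
    rate of positive labels as the dataset as a whole.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels (1) within one group."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(
        w for w, g, y in zip(weights, groups, labels) if g == group and y == 1
    )
    return positive / total


# Hypothetical historical hiring data: group A was hired far more often.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for group in ("A", "B"):
    print(group, round(weighted_positive_rate(weights, groups, labels, group), 3))
```

Unweighted, group A has a 75% positive rate and group B 25%; after reweighing, both groups sit at the overall rate of 50%, so a model trained on the weighted data is no longer rewarded for learning the historical gap.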

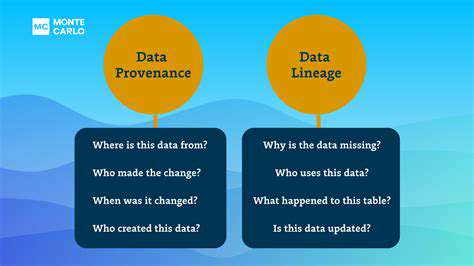

Transparency and Explainability in AI Systems

Understanding how AI systems reach their decisions is fundamental to building trust and maintaining accountability. Trustworthy AI must be able to explain and justify its conclusions. This calls for techniques that expose a system's internal logic, so that stakeholders can follow the reasoning behind AI-generated decisions. Without such transparency, it becomes difficult to assign responsibility for AI-driven outcomes, which can erode public confidence in these technologies.
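
Permutation importance is one model-agnostic explanation technique: shuffle a single input feature and measure how much the model's accuracy drops. A large drop means the model relies on that feature, which can reveal when a decision hinges on a sensitive attribute or a proxy for one. The sketch below is a minimal pure-Python version that treats the model as any callable; the hypothetical `black_box` and its data are invented for illustration. scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, feature, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature column is randomly shuffled.

    rows are tuples of feature values; model is any callable row -> label.
    A large drop means the model relies heavily on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        # Rebuild each row with only the chosen feature replaced.
        shuffled_rows = [
            row[:feature] + (value,) + row[feature + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled_rows, labels))
    return sum(drops) / n_repeats


# Hypothetical "black box": decides using feature 0 and ignores feature 1.
def black_box(row):
    return int(row[0] > 0.5)


rows = [(i / 20, (i * 7 % 20) / 20) for i in range(20)]
labels = [black_box(row) for row in rows]
for feature in (0, 1):
    print(feature, permutation_importance(black_box, rows, labels, feature))
```

Feature 1 receives an importance of exactly zero because the model never reads it, while shuffling feature 0 lowers accuracy. The technique explains which inputs matter, not why, so it complements rather than replaces inherently interpretable designs.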

Accountability and Responsibility for AI Outcomes

Determining who is liable when an AI system causes harm or produces unintended consequences is difficult. Clear accountability structures are essential to ensure that the individuals and companies who create and deploy these technologies answer for them. This includes defining distinct responsibilities for every party in the AI lifecycle, from research scientists and software engineers to end users and regulatory bodies.

Human-Centered Design Principles for AI

AI should be developed with human needs and welfare as its priority. Human-centered design principles emphasize evaluating how a technology will affect different demographic groups and ensuring that its benefits are shared equitably across society. This approach must account for effects on the workforce, fair distribution of benefits, and strong privacy protections throughout development and deployment.

Regulation and Governance of AI Development

Effective oversight mechanisms and governance structures are imperative for responsible AI development. Because AI research and deployment are international in scope, global cooperation and shared standards are crucial. Regulatory frameworks must balance data protection, safety, and security requirements against the need to encourage innovation and market competition. Striking this balance requires sustained collaboration among national governments, the private sector, and research institutions to develop clear policies and benchmarks.
