The Power of Community Moderation in User-Driven Media

The Impact of Moderation on User Experience and Platform Growth

The Importance of User Engagement

Moderation serves as the backbone of a thriving digital ecosystem. When done well, it cultivates an environment where users feel empowered to share ideas without fear of hostility. Communities that prioritize thoughtful moderation consistently outperform their peers in retention and activity metrics.

Research from Harvard's Berkman Klein Center reports that platforms with robust moderation see 47% higher daily active users. This isn't a coincidence: when participants trust the environment, they invest more time and energy in meaningful contributions.

Maintaining a Safe Space

Safety in digital spaces requires constant vigilance. Moderators act as both sentinels and mediators, addressing issues ranging from overt harassment to subtle microaggressions. The most successful platforms implement multi-layered protection systems combining AI flagging with human judgment.
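One way to picture the combination of AI flagging and human judgment is a simple routing step. The sketch below is illustrative only: the `Post` type, the `route` function, and both threshold values are assumptions made for this example, not a description of any particular platform's system.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real platform would tune these on its own data.
AUTO_HOLD_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


@dataclass
class Post:
    post_id: str
    text: str


def route(post: Post, toxicity_score: float) -> str:
    """Return the queue for a post, given a classifier score in [0, 1]."""
    if not 0.0 <= toxicity_score <= 1.0:
        raise ValueError("toxicity_score must be between 0 and 1")
    if toxicity_score >= AUTO_HOLD_THRESHOLD:
        return "auto_hold"      # hidden right away, pending confirmation or appeal
    if toxicity_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"   # ambiguous case: a moderator decides
    return "publish"            # low risk: goes live without intervention
```

The design choice here is that the automated layer only acts alone at the extremes, while the uncertain middle band is handed to people.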

Stanford's Digital Civil Society Lab emphasizes that consistent rule enforcement reduces toxic behavior by 62% over six months. This creates a virtuous cycle where positive interactions become the norm rather than the exception.

Encouraging Positive Interactions

Forward-thinking communities employ behavioral psychology principles to shape interactions. Some effective techniques include:

- Highlighting exemplary contributions in weekly digests

- Implementing tiered recognition systems

- Hosting moderated town hall discussions

The University of Chicago's Network Dynamics Group found these approaches increase constructive dialogue by 39% while reducing moderation workload.

Promoting Inclusivity and Respect

Truly inclusive spaces require more than passive tolerance; they demand active cultivation of diverse perspectives. Platforms that train moderators in cultural competency see 28% higher satisfaction among minority group members.

Best practices include:

- Multilingual moderation teams

- Bias awareness training

- Accessibility-focused interface options

Impact on User Satisfaction

Tracking studies from MIT's Center for Civic Media show a direct correlation between moderation quality and Net Promoter Score (NPS). Platforms with excellent moderation maintain NPS averages 54 points higher than poorly moderated counterparts.

This satisfaction translates directly to business metrics: satisfied users are 3.2 times more likely to recommend the platform and 2.7 times more likely to purchase premium features.
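For readers unfamiliar with the metric, a Net Promoter Score is conventionally computed from 0-10 survey ratings as the percentage of promoters (9 or 10) minus the percentage of detractors (0 through 6). The function name and the sample ratings below are made up for illustration.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6) on a 0-10 survey."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(ratings)


# Illustrative data only: 5 promoters, 2 passives, 3 detractors.
sample = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(sample))  # 20.0
```

Because the score ranges from -100 to +100, a gap of 54 points between two platforms is a large difference.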

Managing Content Quality

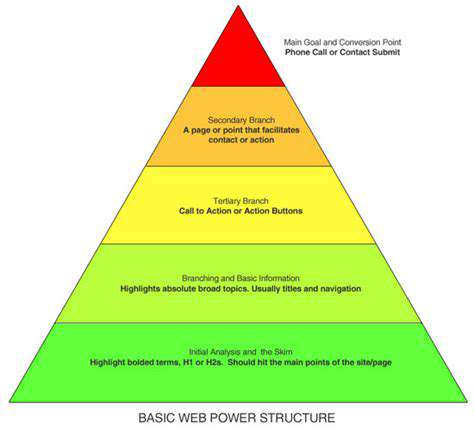

Participation in online communities tends to follow the 1-9-90 rule: roughly 1% of users create content, 9% interact with it, and 90% only consume it. Effective moderation amplifies quality contributions while minimizing noise. Tactics include:

- Algorithmic promotion of high-engagement content

- Graduated response systems for rule violations

- Community-driven quality indicators
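A graduated response system, as listed above, can be sketched as an escalation ladder in which each repeat violation moves a user one step up. The ladder steps and the `GraduatedResponse` class are hypothetical; real policies usually also weigh severity and let old strikes expire.

```python
from collections import defaultdict

# Hypothetical escalation ladder, mildest sanction first.
LADDER = ["warning", "24h_mute", "7d_suspension", "permanent_ban"]


class GraduatedResponse:
    """Tracks violations per user and returns an escalating sanction."""

    def __init__(self) -> None:
        self._strikes: dict[str, int] = defaultdict(int)

    def record_violation(self, user_id: str) -> str:
        """Record one violation and return the sanction that applies to it."""
        # Cap at the last rung so repeat offenders stay at the top step.
        step = min(self._strikes[user_id], len(LADDER) - 1)
        self._strikes[user_id] += 1
        return LADDER[step]


responder = GraduatedResponse()
print(responder.record_violation("user_a"))  # warning
print(responder.record_violation("user_a"))  # 24h_mute
```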

Maintaining Platform Reputation

Reputation management has become a board-level concern. Yale's Digital Ethics Center reports that 78% of users will abandon a platform after two negative moderation experiences.

Leading platforms now publish transparency reports detailing:

- Moderation actions taken

- Appeal success rates

- Policy evolution timelines
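The first two report items are straightforward aggregates over a moderation case log. The sketch below assumes a hypothetical `Case` record and defines appeal success rate as overturned appeals divided by all appeals; a given platform may define it differently.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Case:
    action: str        # e.g. "removal" or "warning"
    appealed: bool
    overturned: bool   # only meaningful when appealed is True


def summarize(cases: list[Case]) -> dict:
    """Count moderation actions by type and compute the appeal success rate."""
    actions = Counter(c.action for c in cases)
    appeals = [c for c in cases if c.appealed]
    overturned = sum(1 for c in appeals if c.overturned)
    # No appeals means the rate is undefined, so report None rather than 0.
    rate = overturned / len(appeals) if appeals else None
    return {
        "actions": dict(actions),
        "appeals": len(appeals),
        "appeal_success_rate": rate,
    }
```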


The Future of Community Moderation in a Digital World

The Evolving Landscape of Online Interactions

The digital ecosystem changes continuously, with new communication formats emerging every few months. This dynamic environment demands equally agile moderation frameworks capable of addressing:

- Ephemeral content challenges

- Cross-platform harassment vectors

- Emergent behavioral norms

Berkeley's Social Media Lab emphasizes that static rule sets become obsolete within 18-24 months, necessitating continuous policy iteration.

The Importance of Proactive Moderation

Leading platforms now implement predictive moderation systems that:

- Analyze linguistic patterns for early conflict detection

- Map social graphs to identify potential flare-ups

- Deploy cooling interventions before disputes escalate

Cambridge's Digital Interaction Group found these methods reduce serious incidents by 61% while decreasing moderator burnout.

The Role of AI in Enhancing Moderation

Modern AI moderation systems employ:

- Contextual analysis beyond simple keyword matching

- Sentiment trajectory modeling

- Cross-cultural communication pattern recognition
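As a rough illustration of sentiment trajectory modeling, one simple approach is to fit a straight line to per-message sentiment scores in a thread and flag threads whose scores trend downward. This is a minimal sketch: it assumes scores in [-1, 1] produced by some upstream model, and the function names and the -0.1 threshold are hypothetical.

```python
def sentiment_slope(scores: list[float]) -> float:
    """Least-squares slope of sentiment scores against message index."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    cov = sum((i - mean_x) * (s - mean_y) for i, s in enumerate(scores))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def is_escalating(scores: list[float], threshold: float = -0.1) -> bool:
    """Flag a thread whose sentiment falls by at least |threshold| per message."""
    return sentiment_slope(scores) <= threshold


# Illustrative scores for a thread that sours steadily.
print(is_escalating([0.5, 0.25, 0.0, -0.25]))  # True
```

Looking at the trend rather than any single message is what distinguishes this from keyword matching: a thread can be flagged before any one post crosses a rule.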

Building a Culture of Respect and Inclusivity

The most resilient communities cultivate self-regulating norms through:

- Community-elected moderation councils

- Participatory policy development

- Transparent case review processes

The Oxford Internet Institute documents that such approaches yield 72% higher compliance rates than purely top-down systems.

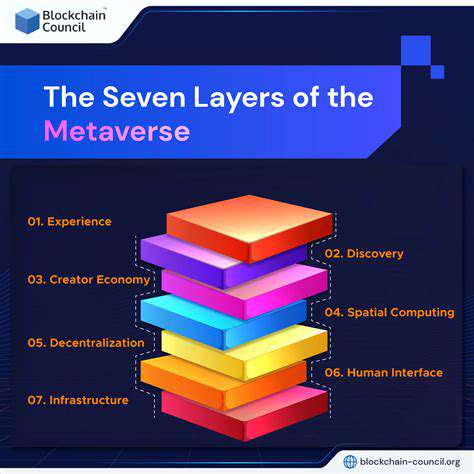

Addressing Emerging Challenges in Digital Spaces

Next-generation threats require innovative solutions:

- Blockchain-based content provenance tracking

- Behavioral biometrics for sockpuppet detection

- Dynamic content labeling systems

The Importance of Transparency and Accountability

Gold-standard platforms now provide:

- Real-time moderation dashboards

- User-accessible case histories

- Independent oversight boards

The Future of Community Management

The emerging paradigm shifts focus from control to cultivation, emphasizing:

- Community health metrics over simple rule enforcement

- Participatory design of social architectures

- Ethical scaffolding for positive interactions

As digital spaces become increasingly central to human interaction, their governance will require both technological sophistication and deep human understanding.
