The Ethical Considerations of AI-Generated Content Ownership

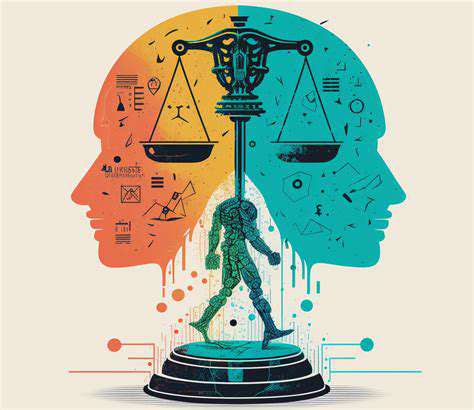

What constitutes originality in this new paradigm? If an AI remixes existing works with minimal human curation, does the output deserve copyright protection? These questions need attention now, before legal precedents solidify in ways that might undervalue human creative labor.

Ethical Implications for Copyright and Ownership

Current copyright frameworks, designed for purely human creations, strain under the weight of AI-generated content. We're witnessing a fundamental mismatch between 20th-century laws and 21st-century creative tools. Consider the photographer who uses AI to enhance images—does the software company deserve partial royalties? Should algorithms that produce patentable inventions be listed as co-inventors? These questions grow more urgent as AI systems demonstrate increasing autonomy in their creative outputs.

The Future of Authorship and Intellectual Property

Adapting to this shifting landscape requires proactive measures across multiple disciplines. Legal scholars must draft new frameworks that distinguish between human-led and algorithm-driven creation. Creative industries should establish best practices for disclosing AI involvement in works. Most crucially, we need inclusive dialogues that bring together artists, technologists, and policymakers to preserve creative integrity while embracing technological progress. The solution likely lies in graduated systems that recognize varying degrees of human and machine contribution.

The Role of Human Input and Algorithmic Contribution

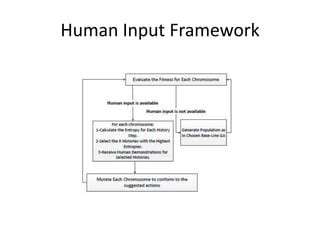

The Importance of Human Oversight in Algorithmic Systems

Despite their impressive capabilities, AI systems inherit the biases and limitations of their training data. Human oversight is an essential safeguard, catching errors that might otherwise perpetuate discrimination or misinformation. In critical applications like medical diagnosis or legal analysis, this human check helps prevent algorithmic overreach. The recent controversy around AI-generated legal briefs containing fabricated case law underscores why human verification remains irreplaceable.

Moreover, a model trained on a fixed snapshot of data does not adapt to cultural shifts on its own. A sentiment analysis tool trained on 2020 social media data would likely misread slang, references, and tone that emerged afterward. Continuous human supervision, including periodic review and retraining, keeps these systems relevant and responsible as societal contexts change.

The Role of Human Input in Algorithm Development and Refinement

Effective algorithm design requires more than technical expertise—it demands domain knowledge that only humans possess. Medical AI developers collaborate with doctors to understand diagnostic nuances; educational software designers work with teachers to capture pedagogical subtleties. This human insight transforms generic algorithms into specialized tools that address real-world complexities.

The refinement process particularly benefits from human judgment. While metrics can measure an algorithm's accuracy, only human evaluators can assess whether its outputs feel authentic or appropriate. In creative domains especially, qualitative human feedback shapes AI tools into more nuanced collaborators rather than blunt automation instruments.

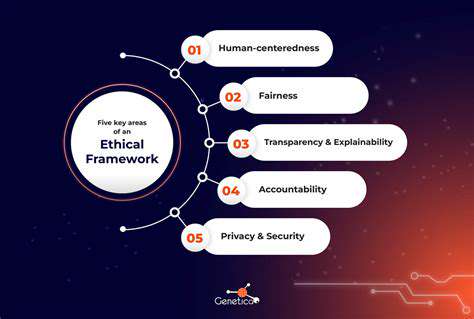

Potential Solutions and Ethical Frameworks

Potential Solutions for Addressing Ethical Concerns

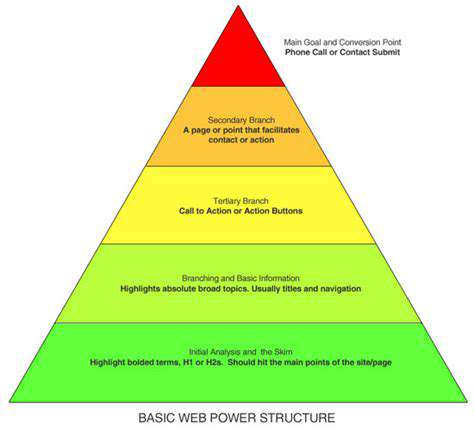

The most promising approach combines adaptive governance models with technical safeguards. Three-tier disclosure systems could specify whether content is human-created, AI-assisted, or AI-generated, giving consumers and regulators necessary transparency. For high-stakes domains like journalism or academic publishing, mandatory disclosure of AI tools used in the creative process would maintain accountability.
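The three-tier idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `WorkRecord` fields, the `human_authored_core` flag, and the rule for separating "AI-assisted" from "AI-generated" are hypothetical choices, not an established standard, and a real scheme would need a far more careful definition of each tier.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Disclosure(Enum):
    HUMAN_CREATED = "human-created"
    AI_ASSISTED = "AI-assisted"
    AI_GENERATED = "AI-generated"


@dataclass(frozen=True)
class WorkRecord:
    title: str
    ai_tools_used: Tuple[str, ...]  # names of AI tools involved; empty if none
    human_authored_core: bool       # hypothetical flag: a human wrote or made the core of the work


def classify(work: WorkRecord) -> Disclosure:
    """Map a work to one of the three disclosure tiers (assumed rule)."""
    if not work.ai_tools_used:
        return Disclosure.HUMAN_CREATED
    if work.human_authored_core:
        return Disclosure.AI_ASSISTED
    return Disclosure.AI_GENERATED


def disclosure_label(work: WorkRecord) -> str:
    """Build a reader-facing label that also names the AI tools, if any."""
    tier = classify(work)
    if tier is Disclosure.HUMAN_CREATED:
        return f"{work.title}: {tier.value}"
    tools = ", ".join(work.ai_tools_used)
    return f"{work.title}: {tier.value} (tools: {tools})"


portrait = WorkRecord("Retouched portrait", ("hypothetical-upscaler",), True)
print(disclosure_label(portrait))
# prints: Retouched portrait: AI-assisted (tools: hypothetical-upscaler)
```

Naming the tools in the label reflects the mandatory-disclosure idea for high-stakes domains; a single boolean is obviously too coarse for real editorial policy and stands in for whatever criteria a governing body would adopt.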

Independent ethics boards, comprising technologists, creatives, and legal experts, could establish industry-specific guidelines. Their recommendations might include watermarking AI-generated content, developing standardized contribution metrics, or creating new copyright categories for human-AI collaborations.

Enhancing Transparency and Accountability

Technical solutions like blockchain-based provenance tracking could keep a tamper-evident record of which steps in a work's creation were performed by humans and which by AI tools. Such a record cannot prove that the logged claims are true, only that they have not been altered afterward. Combined with clear labeling standards, this transparency would help consumers make informed judgments about AI-assisted content while protecting creators' rights.
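The core mechanism behind this kind of provenance tracking can be illustrated with a simple hash-chained log. The sketch below is not a blockchain (there is no distribution or consensus) and all names in it (`append_step`, `verify`, the `actor` and `action` fields) are assumptions made for illustration. It shows only the tamper-evidence property: each entry commits to the one before it, so a later change to any step breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, actor, action):
    """SHA-256 over a canonical JSON encoding of the entry's contents."""
    payload = json.dumps(
        {"prev": prev_hash, "actor": actor, "action": action},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_step(log, actor, action):
    """Return a new log with one more step chained onto the last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "prev": prev_hash,
        "actor": actor,    # e.g. "human" or "ai" (hypothetical labels)
        "action": action,  # free-text description of the creative step
        "hash": _entry_hash(prev_hash, actor, action),
    }
    return log + [entry]


def verify(log):
    """Check that every entry's hash is correct and links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        expected = _entry_hash(entry["prev"], entry["actor"], entry["action"])
        if entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
log = append_step(log, "human", "drafted article text")
log = append_step(log, "ai", "suggested headline options")
log = append_step(log, "human", "selected and edited headline")
print(verify(log))  # prints: True
```

Relabeling the second step's actor as "human" after the fact would make `verify` return `False`, because the stored hash no longer matches the entry's contents. The log still depends on honest reporting at the moment each step is recorded.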

Promoting Ethical Education and Awareness

Curriculum reforms should address AI literacy alongside traditional creative training. Art students might learn to critically evaluate AI tools, while computer science programs incorporate ethics modules on creative ownership. This cross-disciplinary education will produce professionals capable of navigating authorship's new complexities.