Legal Challenges in User-Generated Content Moderation

Jurisdictional Variations and Global Challenges

Jurisdictional Differences in User-Generated Content Law

Legal frameworks for user-generated content (UGC) vary by country and region, so platforms are held accountable for user posts in different ways depending on where they operate. Some jurisdictions impose stricter rules on hate speech or defamation and may require platforms to proactively monitor and remove such content; others prioritize freedom of expression and impose less stringent requirements. Understanding these differences is critical for businesses operating internationally and for users navigating the legal landscape surrounding UGC.

These differences in legal frameworks can create complexities for multinational companies. They must navigate the varying requirements of each jurisdiction, potentially facing different penalties and liabilities for the same content depending on the location of the user and the audience. This necessitates a thorough understanding of the specific laws in each market and the development of robust content moderation strategies that comply with all applicable regulations.
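One way to picture "the same content, different obligations" is a per-region rule table that a moderation pipeline consults before acting. The sketch below is illustrative only: the region codes, category labels, and the `regions_to_restrict` helper are hypothetical placeholders and do not describe any real jurisdiction's law.

```python
from dataclasses import dataclass

# Hypothetical rule table. Region codes and category names are placeholders,
# not statements about what any real jurisdiction restricts.
RESTRICTED_BY_REGION = {
    "REGION_A": {"hate_speech", "defamation"},
    "REGION_B": {"defamation"},
    "REGION_C": set(),
}


@dataclass(frozen=True)
class Post:
    post_id: str
    categories: frozenset  # labels assigned by an earlier review step


def regions_to_restrict(post, rules=RESTRICTED_BY_REGION):
    """Return the sorted regions where at least one of the post's labels is restricted."""
    return sorted(
        region
        for region, restricted in rules.items()
        if post.categories & restricted  # non-empty intersection means a rule applies
    )


if __name__ == "__main__":
    post = Post("p1", frozenset({"defamation"}))
    print(regions_to_restrict(post))
```

Keeping the rules in data rather than in code means a change in one market's requirements can be reflected without touching the decision logic, though a real rule set would need legal review for each market.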

Global Challenges in Content Moderation

The sheer volume and velocity of user-generated content pose a significant challenge to content moderation efforts. Platforms struggle to effectively identify and address harmful content in a timely manner, potentially leading to legal issues and reputational damage. The constant evolution of technology and the proliferation of new platforms and social media trends further exacerbate this challenge.

The Impact of AI on Content Moderation and Legal Frameworks

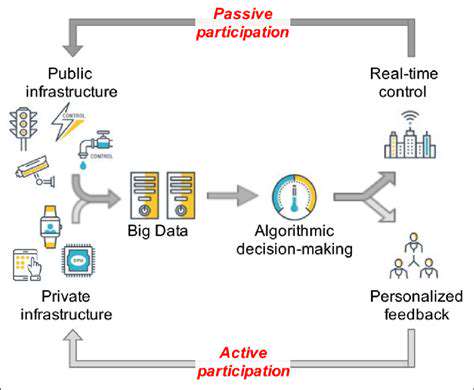

Artificial intelligence (AI) is increasingly being used to automate content moderation tasks, which can significantly increase the speed and scale at which platforms review UGC. However, AI also raises concerns about algorithmic bias and misidentified content, creating new legal challenges around algorithmic fairness and accountability. It further raises the question of who is liable when an AI system makes an error that affects users or the platform itself.

Furthermore, the development of new AI tools requires careful consideration of their impact on free speech and privacy rights. Finding a balance between efficient content moderation and protecting fundamental rights is a significant global challenge that requires ongoing dialogue and adaptation of legal frameworks.

International Cooperation and Harmonization of Legal Standards

Global challenges surrounding UGC require international cooperation to establish harmonized legal standards. This would provide clarity and consistency in how different jurisdictions approach these issues. International organizations and legal experts must collaborate to develop shared guidelines and principles that respect fundamental rights while addressing harmful content effectively. This process will be complex and require ongoing dialogue and compromise.

The Role of Platforms in Combating Harmful Content

Social media platforms have a significant responsibility in combating harmful content, from hate speech and misinformation to illegal activities and harassment. Developing transparent and effective content moderation policies is crucial, not only for maintaining a safe online environment but also for complying with legal requirements. Platforms must invest in resources, training, and technology to detect and address harmful content proactively. This includes collaboration with law enforcement and civil society organizations to develop a comprehensive approach to identifying and removing potentially harmful content.

The Future of UGC and Legal Frameworks

The future of user-generated content is inextricably linked to the evolution of legal frameworks. Technological advancements, changing societal norms, and the need for global cooperation will continue to shape the legal landscape. Platforms must adapt their policies and procedures to address emerging challenges effectively and transparently. This necessitates ongoing dialogue among legal experts, policymakers, and technology developers to ensure that the legal frameworks surrounding UGC remain relevant and effective in the face of technological change and evolving societal values.

Content Moderation and AI

Defining Content Moderation in the Digital Age

Content moderation, in the context of user-generated content, encompasses the processes and systems used to review, filter, and potentially remove content that violates community guidelines or platform policies. This involves a complex balancing act between upholding platform values, ensuring a positive user experience, and respecting freedom of expression. The rise of social media platforms and online forums has dramatically increased the volume of content needing moderation, creating significant challenges for platforms and legal systems alike.

Platforms must carefully consider the potential consequences of their moderation policies, as these policies can impact users' rights to free speech and expression. The lines between acceptable and unacceptable content often blur, requiring careful consideration of cultural nuances and diverse perspectives.

AI's Role in Content Moderation

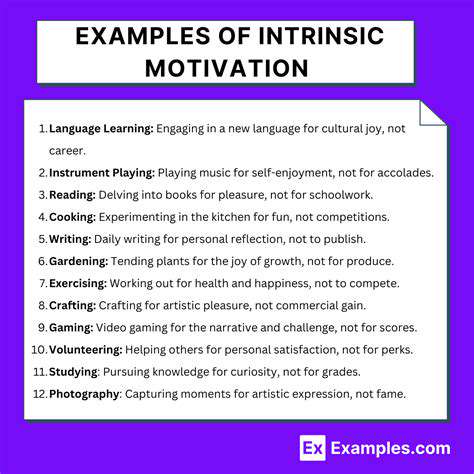

Artificial intelligence (AI) has emerged as a significant tool in content moderation, automating the initial screening process. AI algorithms can identify patterns and characteristics associated with harmful content, allowing human moderators to focus on more complex or nuanced cases. This automation offers the potential to increase efficiency and scale but also raises concerns about bias and the potential for misidentification.

However, relying solely on AI for content moderation is problematic. AI systems can perpetuate existing biases present in the training data, potentially leading to discriminatory outcomes. Human oversight and intervention are crucial to ensure fairness and accuracy.
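The division of labor described above, with automated screening first and human review for uncertain cases, is often implemented as threshold-based routing on a classifier score. The following is a minimal sketch under stated assumptions: the `triage` function, the `Route` names, and the threshold values 0.95 and 0.50 are hypothetical and chosen only for illustration; no particular platform's system is being described.

```python
from enum import Enum


class Route(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


def triage(score, remove_at=0.95, review_at=0.50):
    """Route a post given a classifier score in [0, 1] (higher = more likely harmful).

    The thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if review_at > remove_at:
        raise ValueError("review_at must not exceed remove_at")
    if score >= remove_at:
        return Route.AUTO_REMOVE   # only very confident predictions act automatically
    if score >= review_at:
        return Route.HUMAN_REVIEW  # uncertain cases go to a human moderator
    return Route.ALLOW


if __name__ == "__main__":
    for s in (0.99, 0.70, 0.10):
        print(s, triage(s).value)
```

Widening the band between the two thresholds sends more content to human moderators, which trades throughput for oversight.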

Legal Frameworks and Content Moderation

Legal frameworks around content moderation vary significantly across jurisdictions. Some countries have stricter regulations regarding the removal of specific types of content, while others adopt a more nuanced approach, prioritizing user rights and freedom of expression. These differences create significant challenges for global platforms aiming to operate consistently across different markets.

Navigating these diverse legal landscapes requires a deep understanding of local laws and regulations. Platforms must carefully consider the potential legal ramifications of their content moderation policies and practices in each jurisdiction where they operate.

The Challenges of Defining Harmful Content

Determining what constitutes harmful content is a complex and often contentious issue. This definition varies based on cultural contexts, societal norms, and evolving understandings of acceptable behavior online. Platforms must carefully craft their guidelines to encompass a wide range of potential harm while avoiding overbroad restrictions that stifle legitimate expression.

Transparency and Accountability in Content Moderation

Transparency in content moderation practices is crucial to building trust and accountability. Users should have a clear understanding of the policies and procedures governing content removal, and platforms should be open about how these systems operate. This transparency can help mitigate concerns about censorship and bias in moderation decisions.

Accountability mechanisms are also essential to ensure that platforms are held responsible for their actions. Clear procedures for appealing moderation decisions and mechanisms for redress should be in place to address user grievances and ensure fairness.
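An appeals procedure depends on each decision being recorded with enough detail to explain and revisit it. As a rough sketch, a decision record might capture which policy was cited, whether the decision was automated, and the state of any appeal. The `ModerationDecision` class, its field names, and the status strings below are hypothetical, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModerationDecision:
    """Hypothetical record of one moderation action and its appeal state."""
    post_id: str
    action: str        # e.g. "removed"
    policy_cited: str  # the rule shown to the user as the reason
    decided_by: str    # "automated" or "human"
    decided_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    appeal_status: Optional[str] = None  # None, "pending", "upheld", or "reversed"

    def open_appeal(self):
        """Mark the decision as under appeal; only one appeal is allowed here."""
        if self.appeal_status is not None:
            raise ValueError("an appeal has already been filed")
        self.appeal_status = "pending"

    def resolve_appeal(self, overturn):
        """Close a pending appeal, either reversing or upholding the decision."""
        if self.appeal_status != "pending":
            raise ValueError("no pending appeal to resolve")
        self.appeal_status = "reversed" if overturn else "upheld"


if __name__ == "__main__":
    d = ModerationDecision("p1", "removed", "harassment-policy", "automated")
    d.open_appeal()
    d.resolve_appeal(overturn=True)
    print(d.appeal_status)
```

Recording `decided_by` alongside the outcome also makes it possible to report how often automated decisions are reversed on appeal.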

Liability for User-Generated Content

Platforms face significant legal challenges regarding liability for user-generated content. The question of whether platforms are responsible for the content posted by their users is complex and often contested in court. Determining the level of platform involvement and control over the content is key in establishing liability.

This issue becomes even more intricate when dealing with content that incites violence or constitutes defamation or harassment. Platforms must carefully consider their role in preventing harm while respecting users' rights to expression. This includes establishing clear policies and procedures to identify and address such content.

The Future of Content Moderation in the Digital Age

The future of content moderation likely involves a more sophisticated combination of AI tools and human oversight. Continuous improvement in AI algorithms, coupled with robust human review processes, will be crucial in balancing the need for efficiency with the requirement for fairness and accuracy. This evolving landscape requires ongoing dialogue between technology companies, legal experts, and policymakers to ensure responsible and effective content moderation practices.

Ongoing research and development in AI and machine learning will play a critical role in developing more nuanced and sophisticated content moderation systems that can adapt to evolving online threats and challenges. This will necessitate a constant reevaluation of existing policies and procedures to ensure they remain relevant and effective.
