Ethical AI in Metaverse Entertainment

Bias and Representation in AI-Generated Content

Data Bias in AI-Gene

AI-gene systems, like other machine learning models, are trained on data. If this data reflects existing societal biases, the AI-gene system will likely perpetuate and even amplify those biases. This is a critical issue because biased AI-gene predictions can lead to discriminatory outcomes in areas like healthcare, financial services, and criminal justice. Understanding and mitigating bias in the training data is paramount for building fair and equitable AI-gene models.

For example, if a dataset used to train an AI-gene model for predicting disease risk disproportionately includes data from a specific demographic group, the model may not accurately assess the risk for other groups. This could lead to inaccurate diagnoses and treatment plans, potentially harming individuals from underrepresented groups.
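One way to make this concrete is to report accuracy separately for each demographic group instead of as a single overall number. The following is a minimal sketch under stated assumptions: the function name `accuracy_by_group`, the group labels "A" and "B", and the toy records are all hypothetical and not taken from any real dataset.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy data: group "A" dominates the dataset, group "B" is sparse.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("A", 1, 1), ("A", 0, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0),
]

per_group = accuracy_by_group(records)
overall = sum(1 for _, truth, predicted in records if truth == predicted) / len(records)

for group, acc in sorted(per_group.items()):
    print(f"group {group}: accuracy {acc:.2f}")
print(f"overall: accuracy {overall:.2f}")
```

In this toy data the overall accuracy looks acceptable while the underrepresented group fares much worse, which is the pattern an aggregate metric can hide.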

Representation of Diverse Genetic Data

A crucial aspect of fairness in AI-gene systems is ensuring that the training data accurately reflects the diversity of the human genome. Genetic variation exists across different populations, and neglecting this diversity can lead to inaccurate predictions and potentially harmful outcomes. AI-gene models need to be trained on data that encompasses the full spectrum of genetic variation to avoid biased results.
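A simple first check is to compare each population's share of the training data with its share in a chosen reference population. The sketch below assumes hypothetical population labels (`pop_1`, `pop_2`, `pop_3`) and made-up counts and reference shares; in practice the reference would have to come from a source appropriate to the intended use of the model.

```python
def representation_gaps(dataset_counts, reference_shares):
    """Return {group: dataset_share - reference_share}.

    A negative value means the group is underrepresented in the dataset
    relative to the reference population.
    """
    total = sum(dataset_counts.values())
    if total == 0:
        raise ValueError("dataset_counts must contain at least one sample")
    gaps = {}
    for group, reference_share in reference_shares.items():
        dataset_share = dataset_counts.get(group, 0) / total
        gaps[group] = dataset_share - reference_share
    return gaps


# Hypothetical sample counts per population in a training set.
dataset_counts = {"pop_1": 800, "pop_2": 150, "pop_3": 50}
# Hypothetical shares of the same populations in the target population.
reference_shares = {"pop_1": 0.5, "pop_2": 0.3, "pop_3": 0.2}

gaps = representation_gaps(dataset_counts, reference_shares)
for group, gap in sorted(gaps.items()):
    status = "under" if gap < 0 else "over"
    print(f"{group}: {status}represented by {abs(gap):.0%}")
```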

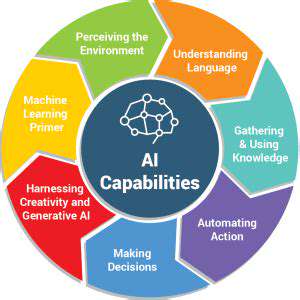

Algorithmic Transparency and Explainability

The black-box nature of some AI algorithms can make it difficult to understand how AI-gene systems arrive at their predictions. This lack of transparency makes it harder to identify and address biases within the algorithm itself. Transparency is therefore crucial for building trust and accountability in AI-gene systems, particularly in sensitive applications like medical diagnosis.

Techniques like explainable AI (XAI) are being developed to improve the transparency of AI-gene models, allowing researchers and clinicians to understand the reasoning behind the predictions and identify potential biases.
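As one illustration of an explainability technique, permutation feature importance measures how much a model's accuracy drops when the values of a single feature are shuffled. The sketch below is a toy under stated assumptions: `toy_model`, the two-feature rows, and the labels are hypothetical, and the article does not prescribe this particular method.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [
                row[:j] + (value,) + row[j + 1:]
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


def toy_model(row):
    """Hypothetical model: uses only feature 0 and ignores feature 1."""
    return 1 if row[0] > 0.5 else 0


rows = [
    (0.1, 0.9), (0.2, 0.1), (0.3, 0.8), (0.4, 0.2),
    (0.6, 0.7), (0.7, 0.3), (0.8, 0.6), (0.9, 0.4),
]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
for index, value in enumerate(importances):
    print(f"feature {index}: importance {value:.3f}")
```

Because the toy model never reads feature 1, shuffling it leaves accuracy unchanged and its importance is zero, while feature 0 carries all of the importance. If a feature that stands in for a demographic attribute ranked high in a real model, that would be a prompt for closer review.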

Ethical Considerations in AI-Gene Development

Developing AI-gene systems raises a complex set of ethical considerations. These include issues of data privacy, informed consent, and equitable access to these technologies. Careful consideration of ethical implications is essential at every stage of the AI-gene development process, from data collection to deployment.

The potential for misuse or unintended consequences of AI-gene systems also needs careful consideration. These systems could have significant impacts on individuals and society, and ethical guidelines are necessary to ensure responsible and beneficial application.

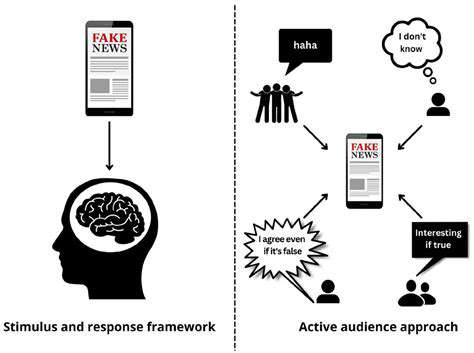

Potential for Bias Amplification

An important concern in AI-gene applications is bias amplification. Biases present in the initial training data can be perpetuated, and the model's own predictions can introduce new ones. If those predictions are then incorporated into subsequent datasets, each retraining round can reinforce the bias, creating a cycle of amplification.
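The cycle can be illustrated with a deliberately simplified simulation. Everything here is an assumption made for illustration: the "gap" is an abstract disparity between two groups, `amplification` is how much the model exaggerates the gap it sees in its training data, and `feedback` is the fraction of the next dataset that comes from the model's own predictions. Real systems are far more complex.

```python
def simulate_feedback(initial_gap, amplification=1.2, feedback=0.5, rounds=5):
    """Return the disparity after each retraining round in a toy feedback loop.

    initial_gap   -- disparity between two groups in the first dataset (0..1)
    amplification -- factor by which the model exaggerates the gap it learns
    feedback      -- share of the next dataset drawn from model predictions
    """
    gaps = [initial_gap]
    gap = initial_gap
    for _ in range(rounds):
        # The model reproduces the gap in its data, somewhat exaggerated.
        model_gap = min(1.0, amplification * gap)
        # The next dataset mixes existing data with the model's predictions.
        gap = (1 - feedback) * gap + feedback * model_gap
        gaps.append(gap)
    return gaps


amplified = simulate_feedback(initial_gap=0.10)
stable = simulate_feedback(initial_gap=0.10, amplification=1.0)

for round_number, gap in enumerate(amplified):
    print(f"round {round_number}: gap {gap:.3f}")
```

With `amplification=1.0` the gap stays where it started, so in this toy model the growth comes entirely from the model exaggerating what it learned and that output being fed back into the data.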

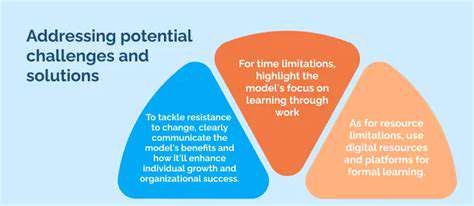

Mitigating Bias in AI-Gene Models

Addressing bias in AI-gene models requires a multifaceted approach. This includes careful data curation to ensure diverse and representative datasets, the development of algorithms that are transparent and explainable, and the establishment of ethical guidelines to ensure responsible development and deployment. By proactively addressing bias at all stages of the AI-gene process, we can create more equitable and reliable systems.

Regular audits and evaluations of AI-gene models are also crucial to detect and correct biases as they emerge, ensuring continuous improvement and fairness.
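A recurring audit can be as simple as comparing how often the model returns a positive prediction for each group and flagging groups that fall well below the highest rate. This is a minimal sketch, not a complete fairness audit: the function names, the groups "A" and "B", the toy predictions, and the `min_ratio=0.8` threshold are all illustrative assumptions.

```python
from collections import defaultdict


def selection_rates(predictions):
    """Return {group: share of positive predictions} for (group, bool) pairs."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted_positive in predictions:
        totals[group] += 1
        if predicted_positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def audit_selection_rates(predictions, min_ratio=0.8):
    """Flag groups whose rate is below min_ratio times the highest group rate."""
    rates = selection_rates(predictions)
    highest = max(rates.values())
    if highest == 0:
        return rates, {}
    flagged = {
        group: rate / highest
        for group, rate in rates.items()
        if rate / highest < min_ratio
    }
    return rates, flagged


# Hypothetical model outputs collected during one audit period.
predictions = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates, flagged = audit_selection_rates(predictions)
for group, ratio in flagged.items():
    print(f"group {group} flagged: rate is {ratio:.0%} of the highest group rate")
```

A difference in rates is not proof of unfairness on its own, since base rates can differ between groups, but running a check like this on a schedule gives reviewers a consistent signal to investigate.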

Addressing the Potential for AI-Driven Manipulation and Addiction

Addressing the Ethical Considerations of AI-Driven Diagnostics

The integration of artificial intelligence (AI) into medical diagnostics presents a wealth of opportunities, but also raises critical ethical concerns. Careful consideration must be given to the potential biases embedded within AI algorithms, ensuring they don't perpetuate existing health disparities. Equitable access to AI-powered diagnostic tools is paramount to avoid exacerbating existing health inequities. This includes ensuring affordability and accessibility for all socioeconomic groups.

The potential for misdiagnosis or overdiagnosis due to AI errors is a significant concern. Robust validation and testing procedures are essential to mitigate this risk. Rigorous oversight and regulatory frameworks are needed to ensure the reliability and safety of these AI-driven diagnostic tools.

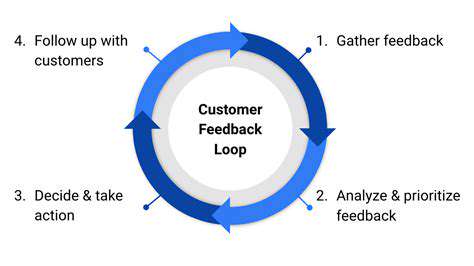

Evaluating the Accuracy and Reliability of AI Diagnostics

The accuracy and reliability of AI-driven diagnostic tools are critical to their effective implementation. Independent validation studies and rigorous testing against established gold standards are essential to assess the performance of these systems and identify potential areas for improvement. This rigorous process will build trust and confidence in AI-driven diagnostics.
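Testing against a gold standard usually comes down to a handful of standard measures, such as sensitivity, specificity, and positive predictive value. The sketch below computes them from paired labels; the function name `diagnostic_metrics` and the ten toy cases are hypothetical.

```python
def diagnostic_metrics(gold, predicted):
    """Compare binary predictions with gold-standard labels (1 = disease present)."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    tp = sum(1 for g, p in zip(gold, predicted) if g and p)
    tn = sum(1 for g, p in zip(gold, predicted) if not g and not p)
    fp = sum(1 for g, p in zip(gold, predicted) if not g and p)
    fn = sum(1 for g, p in zip(gold, predicted) if g and not p)
    return {
        # Share of true cases the tool detects.
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        # Share of healthy cases the tool correctly clears.
        "specificity": tn / (tn + fp) if (tn + fp) else None,
        # Share of positive calls that are actually correct.
        "ppv": tp / (tp + fp) if (tp + fp) else None,
    }


# Hypothetical validation set: gold-standard diagnoses vs. AI tool output.
gold = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

metrics = diagnostic_metrics(gold, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Low sensitivity corresponds to missed diagnoses, while low specificity or positive predictive value corresponds to false alarms and potential overdiagnosis.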

Furthermore, ongoing monitoring and evaluation of AI diagnostic tools in real-world settings are necessary. Collecting and analyzing data on their performance in diverse patient populations can help identify areas where adjustments may be required to improve accuracy and reliability. This constant evaluation is vital to the ongoing success of AI in healthcare.

Understanding the Impact on Healthcare Professionals

The integration of AI in diagnostics is likely to transform the role of healthcare professionals. AI tools can augment, rather than replace, human expertise, but only if clinicians receive ongoing education and training in how to use and interpret AI-generated insights. This includes training on how to critically evaluate AI outputs and understand their limitations.

Understanding the specific skills needed in the evolving healthcare landscape is crucial. Healthcare professionals will need to develop new competencies in data interpretation and AI-based decision-making to effectively collaborate with these advanced tools. This adaptability is key to the successful integration of AI into clinical practice.

Examining the Role of Data Privacy and Security

The use of AI in diagnostics necessitates careful consideration of data privacy and security. Patient data used to train and validate AI models must be handled with the utmost confidentiality and adherence to strict privacy regulations. Robust security measures are essential to protect sensitive patient information from unauthorized access or breaches.
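One common building block is to replace direct identifiers with keyed pseudonyms before records reach a training pipeline. The following is a sketch under stated assumptions: the field names (`patient_id`, `name`, `address`), the helper names, and the placeholder key are hypothetical, and pseudonymization on its own does not make a dataset compliant with any particular privacy regulation.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym from a patient ID using HMAC-SHA256."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def strip_identifiers(record, secret_key, direct_identifiers=("name", "address")):
    """Drop direct identifiers and replace the patient ID with a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in direct_identifiers and key != "patient_id"
    }
    cleaned["pseudonym"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Placeholder key for illustration only; a real key would be generated
# securely and stored separately from the data.
secret_key = b"replace-with-a-securely-stored-key"

record = {
    "patient_id": "P-0001",
    "name": "Example Patient",
    "address": "1 Example Street",
    "age_band": "40-49",
    "test_result": 1,
}

cleaned = strip_identifiers(record, secret_key)
print(sorted(cleaned.keys()))
```

Using a keyed hash rather than a plain hash means someone without the key cannot rebuild the mapping by hashing candidate IDs, while the same patient still maps to the same pseudonym across records.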

Exploring the Potential for Bias in AI Algorithms

AI algorithms are trained on data, and if that data reflects existing societal biases, the resulting algorithms may perpetuate and even amplify these biases. Addressing this issue requires careful data curation and algorithm development to mitigate the risk of bias in AI-driven diagnostic tools. This is vital to ensure equitable and unbiased outcomes for all patients.

Addressing the Cost-Effectiveness of AI-Driven Diagnostics

The initial investment in AI-driven diagnostic tools can be significant. Long-term cost-effectiveness needs to be carefully evaluated. Factors such as the cost of maintenance, training, and potential savings in healthcare resources need to be considered to determine the financial viability of this technology in different healthcare settings. This comprehensive approach is essential to ensure the sustainable use of AI in healthcare.
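The factors above can be combined into a rough break-even estimate. The sketch below is intentionally simple: all figures are made-up placeholders, the function names are hypothetical, and the calculation ignores discounting, inflation, and changes in cost over time.

```python
def cumulative_net_cost(initial_investment, annual_maintenance,
                        annual_training, annual_savings, years):
    """Return cumulative net cost at the end of each year (negative = net saving)."""
    costs = []
    net = initial_investment
    for _ in range(years):
        net += annual_maintenance + annual_training - annual_savings
        costs.append(net)
    return costs


def break_even_year(costs):
    """Return the first year in which cumulative net cost is zero or below."""
    for year, net in enumerate(costs, start=1):
        if net <= 0:
            return year
    return None  # No break-even within the evaluated horizon.


# Hypothetical figures for one healthcare setting.
costs = cumulative_net_cost(
    initial_investment=500_000,
    annual_maintenance=50_000,
    annual_training=20_000,
    annual_savings=200_000,
    years=5,
)

year = break_even_year(costs)
print(f"cumulative net cost by year: {costs}")
print(f"break-even year: {year}")
```

Rerunning the same calculation with the figures for a smaller clinic or a different savings estimate shows how strongly financial viability depends on the setting.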

Analyzing the Regulatory Landscape for AI Diagnostics

A robust regulatory framework is essential to ensure the safety, efficacy, and ethical use of AI-driven diagnostic tools. This framework should balance innovation with appropriate safeguards to protect patients and ensure the reliability of these technologies. Clear guidelines and standards are needed for the development, validation, and deployment of AI diagnostic tools in clinical practice. This proactive approach will help ensure the responsible use of these powerful tools.
