The Ethics of AI in Content Attribution

The Rise of AI-Powered Content Creation

Defining AI-Powered Content Creation

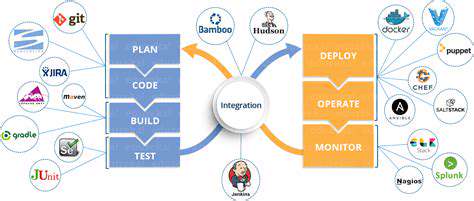

Tools powered by artificial intelligence have transformed modern content creation, offering capabilities that range from simple text generation to intricate multimedia production. These systems use models trained on extensive datasets of text, code, and visual material to generate new outputs. Understanding both their capabilities and their inherent constraints is essential for assessing their influence on today's content landscape.

The applications range from producing concise summaries and reworded text to composing full-length articles and creative writing pieces. This adaptability prompts important discussions about human creativity's evolving role and the potential for improper use across different sectors.

The Impact on Content Attribution

A central ethical quandary in AI-generated content involves determining proper attribution. When artificial intelligence produces creative work, establishing responsibility becomes complex. Should credit go to the user who provided the prompt, the AI system itself, or the developers behind the technology? This ambiguity creates challenges regarding plagiarism and makes it difficult to assign accountability for distributed content.

Transparency and Disclosure in AI-Generated Content

Addressing attribution concerns requires clear transparency about AI's involvement in content production. Digital platforms should explicitly disclose when content originates from artificial intelligence. Such openness enables consumers to make educated decisions about the material they encounter while fostering greater trust in digital information sources.

Frank acknowledgment of AI's role also helps audiences understand these systems' limitations and the biases they may have inherited from their training data.

Addressing Potential Misinformation and Bias

Since AI models learn from massive datasets that may contain societal biases, they can sometimes produce content reinforcing stereotypes or spreading inaccurate information. Implementing processes to detect and reduce these biases in machine-generated content is vital for maintaining factual accuracy and equitable representation.

The Role of Human Oversight and Collaboration

While automation through AI offers efficiency, human judgment remains indispensable. Content professionals can critically assess AI outputs, identify possible flaws or distortions, and ensure alignment with ethical standards and objectives. A synergistic partnership between human expertise and artificial intelligence leads to more sophisticated and thoughtful creative work.

Copyright and Intellectual Property Issues

AI-assisted content creation introduces complicated questions about ownership rights. Determining who holds copyright in machine-generated material (the user, the AI developer, or both jointly) demands clear legal structures to prevent conflicts over usage and attribution.

The Future of Content Creation and AI

Content production will continue evolving through deeper AI integration. As these technologies advance, their influence will expand across journalism, advertising, and media industries. Constructive discussions and ethical guidelines will be crucial for responsibly managing both the challenges and opportunities presented by AI in creative fields.

The Dilemma of Transparency and Disclosure

Defining Transparency in AI-Generated Content

True transparency regarding AI-generated content extends beyond merely identifying the technology involved. It requires insight into the training methods, data sources, and potential algorithmic biases. Simply labeling content as AI-created is insufficient: establishing credibility and accountability for machine-produced material also requires understanding the specific system's parameters and constraints.

For instance, an AI trained on demographically skewed data might unintentionally replicate those biases in its outputs. Genuinely transparent development practices identify, document, and mitigate these potential distortions.

The Role of Disclosure in Content Attribution

Disclosure represents the practical application of transparency, requiring explicit statements about AI's involvement in creative processes. This includes specifying whether content was fully or partially machine-generated and identifying the particular AI models employed. Beyond ethical obligations, such clarity protects consumers and preserves intellectual property rights.

Comprehensive disclosure enables informed content consumption, particularly in fields like journalism or academia where originality matters. It helps audiences recognize potential limitations in AI-generated material and prevents misinterpretations.
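The disclosure elements described above (whether content was fully or partially machine-generated, and which models were used) could be captured in a small machine-readable record. The following is a minimal sketch, not an established standard: the class names, the field names, the `human_reviewed` flag, and the model name `example-model-v1` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field, asdict
from enum import Enum
import json


class AIInvolvement(str, Enum):
    """How much of the content was machine-generated (hypothetical scale)."""
    NONE = "none"
    PARTIAL = "partial"
    FULL = "full"


@dataclass
class DisclosureRecord:
    """Illustrative disclosure metadata attached to one piece of content."""
    title: str
    involvement: AIInvolvement
    models: list[str] = field(default_factory=list)  # AI models employed
    human_reviewed: bool = False  # assumption: platforms may also track review

    def label(self) -> str:
        """Build a reader-facing disclosure statement."""
        if self.involvement is AIInvolvement.NONE:
            return "No AI tools were used to produce this content."
        scope = "fully" if self.involvement is AIInvolvement.FULL else "partially"
        models = ", ".join(self.models) if self.models else "unspecified model(s)"
        review = " Reviewed by a human editor." if self.human_reviewed else ""
        return f"This content was {scope} generated by AI ({models}).{review}"

    def to_json(self) -> str:
        """Serialize the record so it can travel with the content."""
        data = asdict(self)
        data["involvement"] = self.involvement.value
        return json.dumps(data, sort_keys=True)


record = DisclosureRecord(
    title="Sample article",
    involvement=AIInvolvement.PARTIAL,
    models=["example-model-v1"],  # hypothetical model name
    human_reviewed=True,
)
print(record.label())
print(record.to_json())
```

Keeping the reader-facing label and the serialized record in one structure means the visible statement and the underlying metadata cannot drift apart, which is one way a platform might keep its disclosures consistent.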

The Challenges of Implementing Transparency

Establishing effective transparency measures for AI content faces substantial obstacles. Many AI systems operate as complex black boxes, making their decision processes difficult to interpret. The enormous training datasets can obscure bias origins, while proprietary concerns often limit disclosure of development details.

The absence of universal standards creates another significant barrier, resulting in inconsistent transparency practices across different platforms and industries that may confuse consumers.

Balancing Transparency with Commercial Interests

Reconciling transparency requirements with business objectives presents an ongoing challenge. Companies may resist full disclosure about AI usage to protect competitive advantages or market positions. This situation calls for balanced solutions that encourage openness while respecting legitimate commercial considerations.

The User's Perspective on Transparency and Disclosure

Digital audiences increasingly demand greater clarity about content origins as awareness grows regarding AI's role in media production. Consumers seek authentic, accountable information sources and want to understand how artificial intelligence contributes to the material they encounter daily.

The Ethical Implications of Obfuscation

Deliberately concealing AI's creative participation raises serious ethical issues. Such practices can deceive audiences, damage institutional credibility, and compromise intellectual property standards, particularly in fields that value original thought and academic honesty.

The Future of Content Attribution in the AI Era

Developing responsible content attribution standards for the AI age requires cooperation among industry leaders, regulators, and academics. Establishing clear transparency guidelines, developing verifiable authentication methods, and researching more interpretable AI models will all contribute to ethical management of machine-generated content.
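As one illustration of what a "verifiable authentication method" might look like, a publisher could bind a disclosure statement to the exact content it describes with a keyed hash, so that later changes to either are detectable. This is only a sketch under stated assumptions: the function names and the shared secret key are hypothetical, and a real deployment would more likely rely on public-key signatures and an agreed industry format than on this simplified scheme.

```python
import hashlib
import hmac


def sign_content(content: str, disclosure: str, key: bytes) -> str:
    """Return an HMAC-SHA256 tag binding a disclosure to specific content."""
    content_digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    message = f"{content_digest}|{disclosure}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_content(content: str, disclosure: str, key: bytes, tag: str) -> bool:
    """Check that neither the content nor its disclosure has been altered."""
    expected = sign_content(content, disclosure, key)
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(expected, tag)


key = b"publisher-secret-key"  # hypothetical key, for illustration only
article = "Example article body."
disclosure = "Partially generated by AI."

tag = sign_content(article, disclosure, key)
print(verify_content(article, disclosure, key, tag))
print(verify_content(article, "Written entirely by a human.", key, tag))
```

The second check fails because the disclosure no longer matches the tag, which is the property that makes a removed or altered AI disclosure detectable.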
