AI Takes the Stage: The Rise of AI-Generated Content

Navigating the Ethical Implications

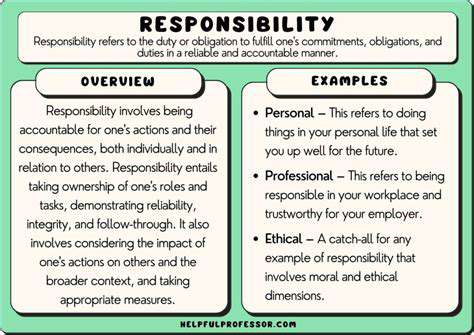

Ethical Considerations in AI-Generated Content

The rapid advancement of AI technology, particularly in content generation, raises a tangle of ethical questions. We must grapple with the potential for misuse, the blurring line between human and machine creativity, and the implications for authorship and intellectual property. Examining these issues critically is essential to the responsible development and deployment of AI tools that produce creative content.

Defining originality and authorship becomes a significant challenge in an AI-driven world. Who owns the copyright when a model trained on vast datasets generates novel content? How do we distinguish genuine human creativity from AI-emulated expression? These questions demand careful consideration and may require legislative revisions so that intellectual property law keeps pace with the technology.

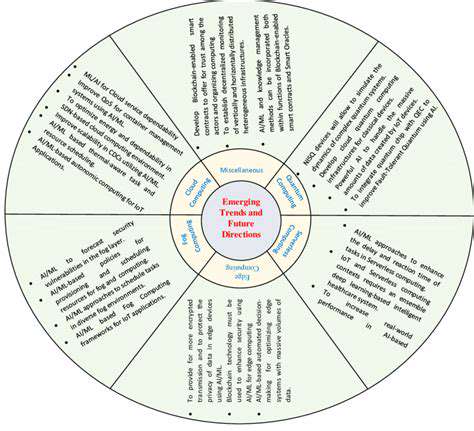

Bias and Representation in AI-Generated Content

AI models are trained on vast datasets, which often reflect existing societal biases. Consequently, AI-generated content can unintentionally perpetuate and amplify these biases, potentially leading to harmful stereotypes or misrepresentations of diverse groups. This is a serious concern, particularly when such content is used in media, marketing, or educational materials. Addressing this bias requires careful curation of training data and the development of algorithms that actively mitigate the risk of perpetuating harmful stereotypes.

Furthermore, a lack of diversity in the datasets used to train AI models can skew how different viewpoints and experiences are represented. The resulting content may fail to capture the complexity and richness of human narratives, excluding and marginalizing certain communities. Ensuring that training data is representative is essential to avoid perpetuating existing inequalities.
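One way to make the idea of checking training data for representativeness concrete is a simple share comparison. The sketch below is illustrative only: the function name `representation_report`, the `region` field, the reference shares, and the 5-point tolerance are all assumptions made for the example, not an established standard or any particular tool's API.

```python
from collections import Counter

def representation_report(records, group_key, reference_shares, tolerance=0.05):
    """Compare each group's share of a dataset with a reference share.

    A group is flagged as underrepresented when its observed share falls
    more than `tolerance` below the expected share. All thresholds here
    are illustrative assumptions.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = {
            "observed": observed,
            "expected": expected,
            "underrepresented": observed < expected - tolerance,
        }
    return report

# Hypothetical toy dataset: 8 records from one group, 2 from another.
sample = [{"region": "north"}] * 8 + [{"region": "south"}] * 2
report = representation_report(sample, "region", {"north": 0.5, "south": 0.5})

for group, stats in report.items():
    print(group, stats)
```

A check like this only surfaces imbalances along attributes that are actually labeled in the data. It says nothing about how groups are portrayed, so it complements careful curation rather than replacing it.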

Transparency and Accountability in AI Content Creation

Understanding how AI models arrive at their outputs is crucial for building trust and accountability. The black-box nature of some AI algorithms can make it difficult to trace the origin of generated content and to identify potential errors or biases. This lack of transparency makes it harder to assess the ethical implications of generated content and to assign responsibility for it. Promoting explainable AI (XAI) techniques is critical to ensuring that AI systems are not just effective but also accountable and understandable.
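To give a feel for what an explainability technique does, here is a minimal sketch of occlusion-based attribution, one simple member of the XAI family: remove each input token in turn and measure how much the model's score changes. The `toy_score` function and its `WEIGHTS` table are hypothetical stand-ins for a real model, chosen only so the example runs on its own.

```python
def occlusion_attribution(tokens, score_fn):
    """Attribute a score to each token by removing it and re-scoring.

    A positive value means the token pushed the score up; a negative
    value means it pushed the score down.
    """
    baseline = score_fn(tokens)
    attributions = []
    for i, token in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        attributions.append((token, baseline - score_fn(reduced)))
    return attributions

# Hypothetical stand-in for a model: sums fixed per-word weights.
WEIGHTS = {"brilliant": 2.0, "boring": -1.5}

def toy_score(tokens):
    return sum(WEIGHTS.get(token, 0.0) for token in tokens)

result = occlusion_attribution(
    ["a", "brilliant", "but", "boring", "plot"], toy_score
)
for token, contribution in result:
    print(f"{token}: {contribution:+.1f}")
```

With a real generative model the score function would be far more expensive to call and the attributions much noisier, but the principle of probing the model to see which inputs drive an output is the same.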

The Impact on Human Creativity and Jobs

The rise of AI-generated content raises concerns about the future of human creativity and the potential displacement of human workers in creative industries. While AI can augment human creativity and accelerate certain processes, it also poses the risk of deskilling human creative professionals. Maintaining a balance between AI-driven automation and human creativity is essential. This requires investment in reskilling and upskilling programs to help individuals adapt to the changing demands of the creative landscape, as well as exploring new avenues for human-AI collaboration.
