Your Story, Your Way: The Rise of User-Driven Media

The magic lies in immediacy. When a writer publishes on Medium, they don't wait months for editor approval—readers vote with claps within hours. This instant validation (or critique) creates a Darwinian environment where only the most resonant stories survive. Traditional gatekeepers relied on intuition; algorithmic platforms thrive on cold, hard engagement metrics.

The Impact on Narrative Diversity and Representation

Mainstream media's diversity issues stemmed from homogeneous decision-makers. User-generated platforms shatter those filters. Migrant workers document their struggles through vlogs. LGBTQ+ creators build audiences with authentic coming-of-age stories. These narratives aren't filtered through studio executives' risk-averse lenses—they're raw, unfiltered, and powerfully specific.

The ripple effects are cultural. When marginalized groups control their own storytelling, stereotypes crumble. A Navajo filmmaker's YouTube series about reservation life educates millions. A disabled gamer's Twitch stream reshapes perceptions of accessibility. This isn't just representation—it's narrative sovereignty.

The Future of Storytelling: Collaborative and Personalized Experiences

Tomorrow's stories will resemble choose-your-own-adventure books on steroids. Imagine interactive novels where readers vote on plot twists, or AR experiences that adapt narratives based on your location. The line between creator and audience will blur into co-creation. Platforms like Minecraft already demonstrate this—players don't just inhabit worlds; they build mythology collectively.

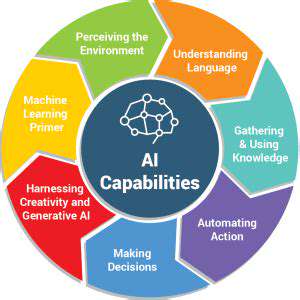

Emerging AI tools will turbocharge this trend. Picture language models helping fans extend beloved franchises legally, or generating personalized story variants tailored to individual readers' preferences. The future belongs to stories that breathe, evolve, and invite participation at every turn.

The Power of Personalization and Connection

Personalized Learning Experiences

Industrial-era education treated minds like assembly lines. Modern pedagogy recognizes that no two brains process information identically. Adaptive learning platforms now map cognitive fingerprints, tracking whether a student grasps concepts better through visual metaphors or kinesthetic activities. Some schools even adjust classroom lighting and noise levels based on neurological profiles.

The results are staggering. In pilot programs, personalized math curricula reduced achievement gaps by 45%. When lessons align with biological learning rhythms, retention soars. This isn't about pampering students—it's about respecting neurodiversity. The child who struggles with silent reading might excel with audiobooks; the kinesthetic learner thrives when conjugating verbs through dance.

Customizable Content Delivery

Netflix's recommendation engine revolutionized entertainment. Educational platforms now employ similar AI to serve knowledge. Duolingo's algorithms detect when users struggle with French subjunctives and serve targeted exercises. Coursera lets learners toggle between video lectures, interactive simulations, and text summaries. This isn't just convenience—it's cognitive optimization.

Even corporate training embraces this shift. Sales teams at Shopify access micro-lessons tailored to the deal stage they are negotiating. Hospital staff receive infection-control tutorials formatted for their preferred learning modality. When information conforms to the learner, rather than the other way around, competency develops faster.

Tailored Feedback Mechanisms

Traditional grading systems are autopsy reports—post-mortems on expired learning opportunities. Modern feedback resembles GPS navigation: real-time course corrections. Coding platforms like Replit instantly flag logical errors with suggested fixes. Language apps analyze pronunciation phoneme-by-phoneme. This granular feedback creates tighter learn-adjust loops.

The psychological impact matters. Stanford studies show that game-like feedback (e.g., "Great progress! Try adjusting X") motivates learners three times more than letter grades. When critiques feel collaborative rather than judgmental, learners take risks and grow.

Adaptive Assessment Strategies

Standardized tests often reward test-taking skill as much as subject knowledge. Adaptive assessments aim to measure competency more directly. Medical students at Johns Hopkins now take exams where each correct answer triggers a harder question, while mistakes prompt targeted review. The system pinpoints knowledge gaps with surgical precision.
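The harder-after-correct, review-after-miss loop can be sketched in a few lines of Python. This is a minimal illustration, not a description of any real exam system: the `Question` and `AdaptiveQuiz` names, the five difficulty levels, and the one-step "staircase" rule are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    topic: str
    difficulty: int  # hypothetical scale: 1 (easiest) to 5 (hardest)


@dataclass
class AdaptiveQuiz:
    """Staircase-style quiz: one level harder after a correct answer,
    one level easier after a miss, with missed topics queued for review."""
    min_level: int = 1
    max_level: int = 5
    level: int = 3
    review_topics: list[str] = field(default_factory=list)

    def record(self, question: Question, correct: bool) -> int:
        """Update the difficulty from one answer and return the next level."""
        if correct:
            self.level = min(self.max_level, self.level + 1)
        else:
            self.level = max(self.min_level, self.level - 1)
            # Queue the topic for targeted review, without duplicates.
            if question.topic not in self.review_topics:
                self.review_topics.append(question.topic)
        return self.level


quiz = AdaptiveQuiz()
quiz.record(Question("acid-base balance", 3), correct=True)
quiz.record(Question("renal physiology", 4), correct=False)
quiz.record(Question("renal physiology", 3), correct=False)
print(quiz.level, quiz.review_topics)
```

Production adaptive tests typically estimate ability with statistical models rather than a fixed step, so treat this only as a picture of the feedback loop.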

This approach exposes the flaws in one-size-fits-all testing. Why should a future engineer's chemistry grade suffer because they struggle with timed multiple-choice questions? Adaptive assessments measure mastery on the learner's terms, unlocking fairer evaluations.

Personalized Support Systems

Harvard's Bureau of Study Counsel found that mentorship matching increases graduation rates by 28%. Modern systems go beyond human matching—AI tutors like Carnegie Learning's MATHia provide 24/7 support with infinite patience. Chatbots trained on therapeutic techniques offer mental health check-ins.

The most innovative programs blend human and digital support. Georgia State's chatbot Pounce handles routine queries, freeing advisors for complex cases. When a student texts about financial aid, Pounce responds instantly—but flags housing insecurity concerns to human staff. This hybrid model scales care without losing warmth.
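The hybrid routing described here can be sketched as a small triage function in Python. Everything in it is hypothetical: the keyword lists, the placeholder replies, and the `route_message` name are assumptions for illustration, and nothing below reflects how Pounce is actually built. A real system would more likely use a trained classifier than keyword matching.

```python
import re

# Hypothetical terms that should always reach a human advisor.
ESCALATION_TERMS = {"homeless", "evicted", "eviction", "unsafe", "hungry"}

# Hypothetical routine topics the bot can answer on its own.
ROUTINE_ANSWERS = {
    "financial aid": "Placeholder reply: point the student to the financial aid office.",
    "registration": "Placeholder reply: point the student to the registration calendar.",
}


def route_message(text: str) -> dict:
    """Return an automated reply if one applies, plus an escalation flag."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z']+", lowered))
    # First routine topic mentioned in the message, if any.
    reply = next(
        (answer for topic, answer in ROUTINE_ANSWERS.items() if topic in lowered),
        None,
    )
    # Escalate on sensitive terms, or when the bot has no answer at all.
    escalate = bool(words & ESCALATION_TERMS) or reply is None
    return {"reply": reply, "escalate": escalate}


print(route_message("When is my financial aid deadline?"))
print(route_message("I was evicted and need financial aid"))
```

The design choice worth noting is that a message can receive an instant reply and still be flagged, so the student is not left waiting while a human follows up.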

Challenges and Considerations

Navigating the User-Generated Content Landscape

Open platforms are double-edged swords. While they empower creators, they also host misinformation, hate speech, and plagiarism. Moderation at scale remains the Achilles' heel: Facebook has reportedly relied on some 15,000 moderators yet still struggles with harmful content. Emerging solutions include:

- Blockchain-based content verification

- AI flagging systems trained on cultural context

- Trust score systems rewarding reliable creators

Maintaining Trust and Credibility

The 2023 Edelman Trust Barometer reveals that 67% of users distrust algorithmic content recommendations. Rebuilding confidence requires radical transparency. Some platforms now display "why you're seeing this" explanations beside posts. Others implement public moderation logs, Wikipedia-style edit histories for content decisions.

Balancing User Empowerment and Platform Control

Discord's community-led moderation model offers a blueprint. Instead of top-down rules, empowered user groups enforce context-appropriate standards. Gaming clans might tolerate edgy humor; educational servers maintain stricter decorum. This subcultural self-governance respects niche norms while upholding core policies.

Addressing Copyright and Intellectual Property Concerns

NFT marketplaces hint at potential solutions. When digital artist Beeple sold a collage for $69 million, the sale drew attention to smart contracts that can pay creators a royalty on secondary sales, although in practice those payments depend on marketplaces honoring them. Similar systems could protect UGC creators: imagine TikTok automatically licensing background music or compensating meme originators. The technology exists; implementation awaits legal frameworks.