Real-Time AI-Powered Lip Syncing for Characters

Imagine an animation studio where characters' lips move in sync with their voices without endless manual adjustments. AI-powered lip-syncing is making that possible. The technology is changing how animators work, letting them concentrate on bringing characters to life through expressions and body language rather than getting bogged down in frame-by-frame synchronization. Because the mouth animation appears as soon as audio is applied, animators get immediate visual feedback, which smooths the workflow and shortens production timelines.

Not long ago, achieving convincing lip movements meant countless hours of meticulous work. Animators had to carefully align each syllable with corresponding mouth shapes – a process prone to human error and inconsistencies. Now, AI handles this tedious task automatically, delivering consistent results across entire projects while freeing up creative energy for more important aspects of animation.

How AI Deciphers Human Speech

The magic happens through machine learning models trained on large collections of recorded speech. These systems don't just recognize words; they learn how different sounds, regional accents, and speech patterns physically manifest in mouth movements. By analyzing subtle variations in pronunciation, the AI generates corresponding lip animations that look natural. This grounding in phonetics lets animated characters speak with much of the organic fluidity of real people.
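One common way to turn recognized speech sounds into mouth shapes is a phoneme-to-viseme lookup, where each phoneme (a distinct speech sound) is assigned a viseme (a visible mouth shape). The sketch below illustrates that idea only; it is not how any particular product works. The table, the viseme names, and the `phonemes_to_keyframes` helper are all hypothetical, and the timed phonemes are assumed to come from a separate speech-alignment step.

```python
from dataclasses import dataclass

# Hypothetical, heavily simplified phoneme-to-viseme table
# (ARPAbet-style phoneme symbols, made-up viseme names).
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "IY": "wide", "EH": "wide",
    "UW": "round", "OW": "round", "W": "round",
    "sil": "rest",
}


@dataclass
class Keyframe:
    start: float  # seconds
    end: float    # seconds
    viseme: str


def phonemes_to_keyframes(timed_phonemes):
    """Convert (phoneme, start, end) tuples into viseme keyframes.

    Consecutive phonemes that share a viseme are merged into one keyframe.
    Phonemes missing from the table fall back to the "rest" shape.
    """
    keyframes = []
    for phoneme, start, end in timed_phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")
        if keyframes and keyframes[-1].viseme == viseme:
            keyframes[-1].end = end  # extend the previous keyframe
        else:
            keyframes.append(Keyframe(start, end, viseme))
    return keyframes
```

For example, feeding in silence followed by "B", "M", and "AA" yields three keyframes, because "B" and "M" both close the lips and are merged into a single "closed" shape.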

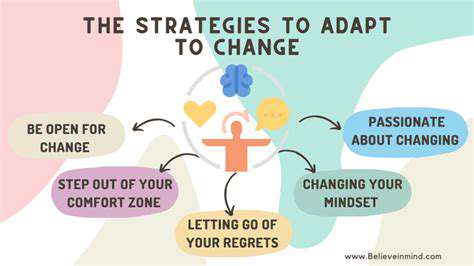

Transforming Character Animation Workflows

The advantages for animation teams are substantial. In an industry where deadlines loom large, AI lip-syncing acts as a powerful time-saver. Synchronization work that once took days can often be done in hours, freeing animators to focus on character personality and emotional expression. This efficiency boost enables rapid experimentation with different performance styles and quicker iteration cycles.

Beyond speed, the technology delivers professional-grade results consistently. Characters appear more lifelike when their speech animations flow smoothly from one sound to the next. This attention to detail enhances audience engagement, making animated performances feel genuine rather than artificial.
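The smooth flow from one sound to the next can be pictured as an eased blend between two mouth poses. The following is a minimal sketch under that assumption: poses are plain dictionaries of blendshape weights, and `smoothstep` and `blend_poses` are illustrative names rather than part of any specific animation tool.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]; input is clamped to that range."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend_poses(prev_pose: dict, next_pose: dict, t: float) -> dict:
    """Blend two mouth poses, each a dict of blendshape name -> weight.

    t = 0 returns prev_pose, t = 1 returns next_pose. A shape missing
    from one pose is treated as having weight 0.0 in that pose.
    """
    eased = smoothstep(t)
    names = set(prev_pose) | set(next_pose)
    return {
        name: (1.0 - eased) * prev_pose.get(name, 0.0)
        + eased * next_pose.get(name, 0.0)
        for name in names
    }
```

Easing the transition instead of switching shapes instantly avoids the popping effect that makes mouth animation look mechanical.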

Current Limitations and Tomorrow's Possibilities

While impressive, the technology still faces hurdles. Capturing individual speaking quirks and handling poor-quality audio recordings remain areas for improvement. Researchers are developing more advanced models that can adapt to these variables, promising even greater accuracy in future iterations.

The next frontier involves combining AI lip-sync with other animation technologies. Imagine motion-captured facial performances enhanced by AI that automatically refines lip movements in real time. Such integrations could push animated realism to unprecedented levels while preserving artists' creative vision.

The Science Behind Convincing Lip Movements

What makes AI-driven lip-sync so convincing? The systems don't just match sounds to mouth shapes; they model the complex mechanics of human speech. Algorithms analyze how the lips protrude, how the tongue positions itself, and how the jaw moves during conversation. This biomechanical approach produces animations that closely mirror natural speech patterns.
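To make the articulators mentioned above concrete, here is a small sketch of how lip protrusion, tongue position, and jaw opening might be carried as numeric parameters and handed to a character rig. Everything in it is an assumption for illustration: the `MouthPose` class, the 0-to-1 value range, and the blendshape names are hypothetical and would differ from rig to rig.

```python
from dataclasses import dataclass


def _clamp01(value: float) -> float:
    """Clamp a value into the [0, 1] range expected by the rig."""
    return max(0.0, min(1.0, value))


@dataclass
class MouthPose:
    """Articulator parameters for one moment of speech (hypothetical)."""
    jaw_open: float        # 0 = closed, 1 = fully open
    lip_protrusion: float  # 0 = relaxed, 1 = fully pursed
    tongue_height: float   # 0 = lowered, 1 = raised to the palate

    def to_blendshapes(self) -> dict:
        """Map articulator values to hypothetical rig blendshape weights."""
        return {
            "jawOpen": _clamp01(self.jaw_open),
            "mouthPucker": _clamp01(self.lip_protrusion),
            "tongueUp": _clamp01(self.tongue_height),
        }
```

Clamping at the boundary keeps an over-eager model prediction from pushing the rig into a physically impossible mouth shape.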

The result goes beyond technical accuracy – it creates emotional authenticity. When characters speak with properly synchronized facial movements, audiences subconsciously accept them as living entities. This subtle but powerful effect makes stories more immersive and characters more relatable, proving that sometimes the smallest details have the biggest impact.