The Role of Tokens in User-Driven Media Engagement

Tokenized Incentives: A Powerful Driver

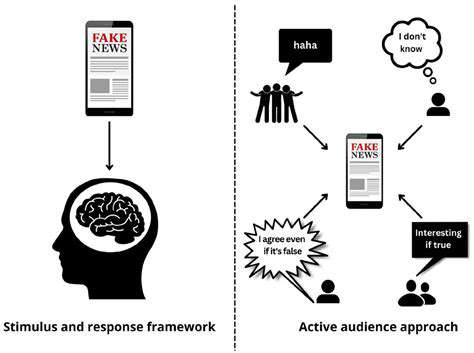

Encouraging active participation on digital platforms, whether forums, games, or social networks, depends heavily on well-designed reward systems. Digital tokens are a versatile tool here: they act as a concrete unit of value given in exchange for user engagement. Users might earn them by contributing to discussions, completing platform tasks, or simply staying active.

The perceived worth of these tokens, whether tied to specific platform utilities or driven by community demand, significantly influences their motivational power. Platform designers must grasp this relationship to build effective incentive structures.

Gamification and Token-Based Rewards

Token systems amplify the effectiveness of gamification strategies by providing measurable achievements. Platforms can distribute tokens for accomplishing defined objectives, reaching milestones, or participating in community activities, creating a structured progression system that encourages ongoing involvement.

For example, users might accumulate tokens by offering valuable insights in discussions, participating in collaborative projects, or maintaining consistent platform engagement. These digital assets could then be redeemed for special features, premium content, or other desirable benefits.
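The earn-and-redeem loop described here can be sketched as a small in-memory ledger. This is a minimal illustration, not a reference design: the action names, reward amounts, catalog items, and the `TokenLedger` class are all hypothetical.

```python
from collections import defaultdict

# Hypothetical reward table: action names and amounts are illustrative only.
REWARDS = {"helpful_post": 5, "project_contribution": 10, "daily_login": 1}

# Hypothetical redemption catalog, priced in tokens.
CATALOG = {"premium_article": 8, "profile_badge": 3}


class TokenLedger:
    """Tracks per-user token balances in memory."""

    def __init__(self):
        self.balances = defaultdict(int)

    def earn(self, user, action):
        """Credit the user for a recognized action and return the new balance."""
        if action not in REWARDS:
            raise ValueError(f"unknown action: {action}")
        self.balances[user] += REWARDS[action]
        return self.balances[user]

    def redeem(self, user, item):
        """Spend tokens on a catalog item; refuse if the balance is too low."""
        cost = CATALOG[item]
        if self.balances[user] < cost:
            return False
        self.balances[user] -= cost
        return True


ledger = TokenLedger()
ledger.earn("alice", "helpful_post")          # balance: 5
ledger.earn("alice", "daily_login")           # balance: 6
ledger.earn("alice", "project_contribution")  # balance: 16
redeemed = ledger.redeem("alice", "premium_article")  # costs 8, leaves 8
```

A real platform would persist balances and guard against duplicate or fraudulent actions; the sketch only shows how earning and spending relate.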

Community Building and Token-Driven Engagement

Digital tokens can be effective in cultivating active online communities. By recognizing contributions and giving users a sense of shared ownership, token systems motivate participation and interaction, which in turn helps build community bonds and user loyalty.

Because token economies keep evolving, they can sustain long-term engagement: participants continue to look for meaningful ways to earn and use their tokens.

Tokenomics and Incentive Structure Design

Crafting successful token-based incentives requires careful economic planning. The system's sustainability depends on strategic decisions regarding token availability, distribution methods, and inherent value propositions. Understanding these economic principles is essential for creating enduring engagement frameworks.

A token's desirability stems from both its scarcity and practical applications. Thoughtful tokenomics ensures ongoing user interest by maintaining the asset's value within the platform ecosystem.
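One way to make the scarcity idea concrete is a capped supply with a shrinking reward pool per period. The sketch below is one possible model under stated assumptions, not a recommended policy: the function name `emission_schedule`, the halving `decay`, and all numbers are hypothetical.

```python
def emission_schedule(max_supply, initial_reward_pool, periods, decay=0.5):
    """Return tokens released per period, never exceeding max_supply in total."""
    released = []
    remaining = max_supply
    pool = initial_reward_pool
    for _ in range(periods):
        # Release the scheduled pool, or whatever is left under the cap.
        amount = min(pool, remaining)
        released.append(amount)
        remaining -= amount
        # Shrink the next period's pool (assumed geometric decay).
        pool = pool * decay
    return released


schedule = emission_schedule(max_supply=1_000, initial_reward_pool=400, periods=5)
print(schedule)       # [400, 200.0, 100.0, 50.0, 25.0]
print(sum(schedule))  # 775.0, which stays under the 1,000 cap
```

Releasing fewer tokens over time keeps new rewards scarce, but it also lowers the incentive for later users, so the decay rate is a design trade-off rather than a fixed rule.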

Token Utility and Practical Application

Effective token systems must offer genuine user benefits beyond simple participation rewards. Tokens should unlock tangible advantages like exclusive access, special privileges, or unique services to maintain their appeal.

For instance, content creation platforms might reward contributors with tokens exchangeable for enhanced features or premium memberships. The practical usefulness of these digital assets directly affects their motivational impact.

Bridging the Gap Between Value and Participation

Token systems serve as critical connectors between user contributions and platform rewards. Well-designed token mechanisms transform participation into concrete benefits, making engagement more appealing and worthwhile.

Aligning token value with user actions helps platforms develop more active and invested communities.

Measuring the Impact of Tokenized Incentives

Assessing token system effectiveness requires tracking key performance indicators. Metrics like engagement levels, participation rates, and community health indicators provide valuable insights into program success.

Analyzing how token distribution and functionality influence these metrics allows for continuous refinement of incentive structures.
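As a rough illustration of such tracking, the following sketch computes three example indicators from an event log. The log format, the metric names, and the sample data are assumptions made for this example; a real platform would choose indicators that match its own goals.

```python
from collections import Counter

# Hypothetical event log: (user, action, tokens_awarded). Data is made up.
events = [
    ("alice", "post", 5),
    ("bob", "post", 5),
    ("alice", "login", 1),
    ("carol", "login", 1),
]
registered_users = 10  # assumed size of the user base


def engagement_metrics(events, registered_users):
    """Summarize participation and token distribution for one reporting period."""
    active = {user for user, _, _ in events}
    tokens_by_user = Counter()
    for user, _, tokens in events:
        tokens_by_user[user] += tokens
    total = sum(tokens_by_user.values())
    # Share of all tokens held by the single largest earner.
    top_share = max(tokens_by_user.values()) / total if total else 0.0
    return {
        "participation_rate": len(active) / registered_users,
        "events_per_active_user": len(events) / len(active) if active else 0.0,
        "top_earner_share": top_share,
    }


metrics = engagement_metrics(events, registered_users)
print(metrics)
```

A high `top_earner_share` would suggest that rewards are concentrating in a few accounts, which is one signal that the distribution rules may need adjusting.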

The Future of Tokenization in Media: Challenges and Opportunities

Tokenization's Impact on Natural Language Processing

In natural language processing (NLP), tokenization has a different meaning from the reward tokens discussed above: it refers to splitting text into units that a model can process. Progress in tokenization is closely tied to progress in NLP. Breaking language into meaningful units underpins core NLP applications, from emotion detection to machine translation. As language models grow more sophisticated, demand for more refined tokenization grows with them, which is likely to spur innovation in context-sensitive methods.

Different tokenization techniques serve different needs: some preserve sentence structure, while others emphasize semantic understanding. Future solutions will probably combine several approaches tailored to specific applications.

The Role of Context in Modern Tokenization

Contemporary text segmentation transcends simple word separation. Accurate language interpretation now demands awareness of surrounding context, facilitated by advanced machine learning and expansive language models. This shift enables tokens to represent larger, more meaningful language chunks when appropriate.

Consider the expression "run of the mill." Basic word-level segmentation would isolate each of the four words, while context-aware processing would treat the entire phrase as a single conceptual unit.
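The difference can be shown with a toy comparison. Note that the sketch below uses a fixed phrase lexicon with greedy longest-match merging, which is a simple stand-in for context-aware processing rather than a learned model; the `PHRASES` set and both function names are hypothetical.

```python
# Hypothetical phrase lexicon; a real system would learn or load these.
PHRASES = {("run", "of", "the", "mill"), ("kick", "the", "bucket")}
MAX_LEN = max(len(p) for p in PHRASES)


def word_tokens(text):
    """Basic segmentation: lowercase and split on whitespace."""
    return text.lower().split()


def phrase_aware_tokens(text):
    """Greedy longest-match merge of known multiword expressions."""
    words = word_tokens(text)
    out = []
    i = 0
    while i < len(words):
        # Try the longest candidate phrase first, down to two words.
        for n in range(min(MAX_LEN, len(words) - i), 1, -1):
            if tuple(words[i:i + n]) in PHRASES:
                out.append(" ".join(words[i:i + n]))
                i += n
                break
        else:
            # No phrase starts here, so keep the single word.
            out.append(words[i])
            i += 1
    return out


sentence = "It was a run of the mill update"
print(word_tokens(sentence))
# ['it', 'was', 'a', 'run', 'of', 'the', 'mill', 'update']
print(phrase_aware_tokens(sentence))
# ['it', 'was', 'a', 'run of the mill', 'update']
```

A lexicon cannot tell when the same words are meant literally, which is exactly the gap that context-sensitive models aim to close.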

Adapting to New Languages and Scripts

Text segmentation technology must expand beyond European languages to accommodate global linguistic diversity. Effective processing requires specialized approaches for agglutinative languages, non-Roman writing systems, and varied grammatical structures as NLP applications reach worldwide audiences.
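A short example shows why whitespace-based splitting does not carry over to every script. The character-level fallback here is only a crude baseline chosen for illustration, not how production systems segment Chinese; both function names are hypothetical.

```python
def whitespace_tokens(text):
    """Split on whitespace, which works for scripts that separate words with spaces."""
    return text.split()


def char_tokens(text):
    """Character-level fallback: one token per non-space character."""
    return [ch for ch in text if not ch.isspace()]


english = "natural language processing"
chinese = "我喜欢自然语言处理"  # "I like natural language processing"

print(len(whitespace_tokens(english)))  # 3
print(len(whitespace_tokens(chinese)))  # 1, because the sentence has no spaces
print(len(char_tokens(chinese)))        # 9
```

Neither result for the Chinese sentence matches its actual word boundaries, which is why such languages call for dedicated segmentation methods.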

The Future of Tokenization in Medical Applications

Healthcare applications particularly benefit from advanced text segmentation. Improved methods enable better extraction of clinical information, pattern recognition in medical records, and enhanced diagnostic tools. These advancements could lead to more precise identification of symptoms and conditions from patient records, potentially improving healthcare delivery.
