The Role of Decentralized Finance (DeFi) in UGC

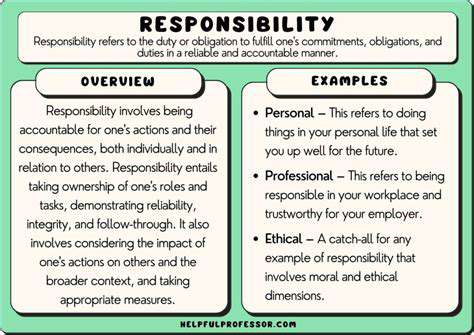

Understanding UGC Tokenization

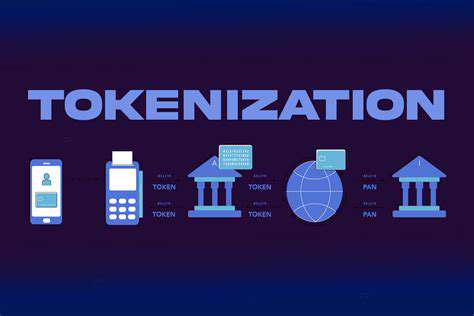

User-generated content (UGC) has become a dominant force in the digital landscape, spanning social media, forums, and multimedia platforms. The sheer volume of this content presents both opportunities for monetization and challenges in managing unstructured data. Tokenization makes that volume tractable by splitting UGC into discrete, analyzable units called tokens. These tokens serve as the foundation for further data processing and value extraction.
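
As a rough illustration of what splitting UGC into tokens can look like, here is a minimal Python sketch. The regular expression and the function names `tokenize` and `token_counts` are assumptions made for this example, not part of any particular platform's pipeline.

```python
import re
from collections import Counter

# Hypothetical pattern: word-like runs, optionally prefixed by # or @,
# with internal apostrophes kept (e.g. "don't").
TOKEN_PATTERN = re.compile(r"[#@]?\w+(?:'\w+)*")


def tokenize(text: str) -> list[str]:
    """Lowercase a post and split it into word-like tokens."""
    return TOKEN_PATTERN.findall(text.lower())


def token_counts(posts: list[str]) -> Counter:
    """Count how often each token appears across a collection of posts."""
    counts = Counter()
    for post in posts:
        counts.update(tokenize(post))
    return counts


if __name__ == "__main__":
    print(tokenize("Loving the new camera! #photography @brand"))
    print(token_counts(["Great phone", "great battery"]).most_common(1))
```

This pattern discards punctuation and emoji, so it is only a starting point for real user content.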

This approach enables analysis of large datasets, powering applications like sentiment tracking, thematic categorization, and personalized content delivery. By isolating key linguistic elements, businesses can identify patterns in user behavior at a fine level of detail. Such granular insights support targeted marketing campaigns and user engagement strategies.

Monetization Strategies Using Tokenized UGC

Tokenized UGC opens several revenue pathways. Sentiment-driven advertising is one prominent application: brands identify user enthusiasm for specific products or services and respond to it. When algorithms detect positive mentions of a product category, marketers can deliver promotional content timed to that interest, which may improve conversion.
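
The detection step described above can be sketched with a toy lexicon-based scorer. The word lists, the punctuation handling, and the function names below are illustrative assumptions. Production systems typically rely on trained sentiment models instead of fixed word lists.

```python
# Hypothetical sentiment lexicons, chosen only for this example.
POSITIVE = {"love", "great", "amazing", "excellent"}
NEGATIVE = {"hate", "terrible", "broken", "awful"}


def sentiment_score(tokens: list[str]) -> int:
    """Return (# positive tokens) minus (# negative tokens)."""
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)


def positive_mentions(posts: list[str], keyword: str) -> list[str]:
    """Return posts that mention `keyword` and have a positive score."""
    hits = []
    for post in posts:
        # Naive tokenization: split on whitespace, strip edge punctuation.
        tokens = [t.strip(".,!?") for t in post.lower().split()]
        if keyword in tokens and sentiment_score(tokens) > 0:
            hits.append(post)
    return hits


if __name__ == "__main__":
    posts = [
        "I love these headphones!",
        "These headphones are terrible",
        "Great weather today",
    ]
    print(positive_mentions(posts, "headphones"))
```

Only the first sample post both mentions the keyword and scores positively, so it is the only one a campaign would target under this simple rule.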

The technology also supports content personalization. By mapping individual user preferences through their tokenized interactions, platforms can construct tailored content journeys intended to increase session duration and user satisfaction. This approach can enhance the user experience while creating additional monetization touchpoints throughout the engagement cycle.

Challenges in Tokenizing UGC

Despite its potential, UGC tokenization encounters significant technical hurdles. The organic nature of user-created content introduces variables like regional slang, emoji usage, and grammatical variations that challenge conventional parsing algorithms. Maintaining contextual accuracy while processing these linguistic nuances requires increasingly sophisticated computational models that can interpret rather than simply parse human expression.
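
One common way to soften these problems is a normalization pass before tokenizing. The sketch below assumes two small, hand-written lookup tables (`SLANG` and `EMOJI`), both hypothetical. It shows the idea only and does not address context or irony.

```python
# Hypothetical lookup tables; real systems would need far larger,
# regularly updated resources.
SLANG = {"gr8": "great", "luv": "love", "u": "you"}
EMOJI = {"🔥": "fire", "😍": "love"}


def normalize(text: str) -> list[str]:
    """Replace known emoji with words, then map slang to standard forms."""
    for symbol, name in EMOJI.items():
        # Pad with spaces so an emoji attached to a word becomes its own token.
        text = text.replace(symbol, f" {name} ")
    words = text.lower().split()
    return [SLANG.get(word, word) for word in words]


if __name__ == "__main__":
    print(normalize("luv this 🔥"))
    print(normalize("gr8 pics😍"))
```

A table-driven approach like this cannot tell when "fire" is meant literally, which is one reason the text above points toward models that interpret context.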

The Role of Natural Language Processing (NLP)

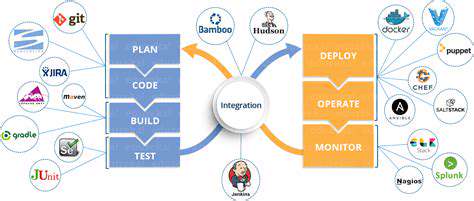

Advanced NLP systems have become the backbone of effective UGC analysis. These systems undergo extensive training on diverse language corpora to develop semantic understanding capabilities. The ability to detect subtle communication elements like irony, cultural references, and implied meaning separates basic tokenization from truly valuable content analysis. As these algorithms improve, they enable more accurate classification of UGC for commercial applications.

Future Implications of UGC Tokenization

The evolution of tokenization technology promises transformative changes for digital platforms. Next-generation systems will enable real-time analysis of user sentiment, allowing businesses to dynamically adjust their engagement strategies. Emerging applications may include automated customer service solutions that leverage tokenized interaction histories and AI-powered content creation tools that respond to detected user preferences.

While mainstream analysis focuses on conventional metrics, many behavioral indicators are overlooked. Standard measurements provide useful baselines but fail to capture the full spectrum of user motivation. Incorporating these overlooked indicators yields richer behavioral profiles, enabling more nuanced platform design and community management approaches.

DeFi-Enabled Incentives and Rewards for UGC Creation

Incentivizing User-Generated Content (UGC) in Decentralized Finance (DeFi)

Decentralized finance ecosystems increasingly recognize UGC as a catalyst for platform vitality. By implementing thoughtful reward structures, DeFi projects can cultivate active participant communities that collectively enhance platform value. This strategy not only attracts new users but also encourages existing members to contribute higher-quality content that advances the ecosystem's development.

Types of DeFi-Based Incentives

DeFi platforms offer diverse incentive mechanisms for content creators, ranging from native token distributions to governance participation rights. Some systems incorporate non-fungible tokens (NFTs) that confer special privileges or represent unique digital assets. Additionally, platforms may provide tiered access to premium features based on contribution quality and consistency.

The reward calculus often considers multiple content dimensions, from technical depth to engagement metrics. Systems might weight factors like originality, educational value, or demonstrated expertise when determining compensation levels. This multi-criteria approach aims to recognize and encourage substantive contributions that meaningfully advance community knowledge.
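
A multi-criteria reward calculation of this kind can be sketched as a weighted sum followed by a proportional split of a reward pool. The weights, the criterion names, and the 0-to-1 score scale below are assumptions for illustration, not values taken from any actual DeFi protocol.

```python
# Hypothetical weights per criterion; they sum to 1.0.
WEIGHTS = {"originality": 0.4, "educational_value": 0.35, "engagement": 0.25}


def quality_score(scores: dict[str, float]) -> float:
    """Weighted sum of per-criterion scores, each assumed to lie in [0, 1]."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def distribute_rewards(
    pool: float, submissions: dict[str, dict[str, float]]
) -> dict[str, float]:
    """Split `pool` among creators in proportion to their quality scores."""
    totals = {creator: quality_score(s) for creator, s in submissions.items()}
    total = sum(totals.values())
    if total == 0:
        return {creator: 0.0 for creator in totals}
    return {creator: pool * score / total for creator, score in totals.items()}


if __name__ == "__main__":
    submissions = {
        "alice": {"originality": 1.0, "educational_value": 1.0, "engagement": 1.0},
        "bob": {"originality": 0.5, "educational_value": 0.5, "engagement": 0.5},
    }
    print(distribute_rewards(150.0, submissions))
```

In this sample, alice scores twice as high as bob on every criterion, so she receives two thirds of the pool. Publishing the weights is one way a platform could make such a scheme auditable by its users.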

The Importance of Transparency and Reward Fairness

Successful incentive systems prioritize clear communication of reward mechanisms. Users need an unambiguous understanding of how their contributions translate into compensation to maintain trust in the ecosystem. Opaque algorithms or shifting criteria can rapidly degrade community confidence and participation rates.

Measuring the Impact of DeFi-Based Incentives

Evaluating incentive effectiveness demands comprehensive analytics that transcend basic engagement metrics. Sophisticated platforms track content propagation patterns, qualitative community feedback, and downstream conversion metrics. The ultimate measure of success lies in the platform's ability to convert content engagement into sustainable growth and value creation.

The Future of DeFi Incentives and UGC

Emerging technologies will reshape DeFi incentive models, with AI-powered content evaluation systems enabling more nuanced reward distribution. Novel tokenomic structures may emerge that better align short-term contributions with long-term platform success. The intersection of decentralized identity solutions and UGC could create persistent reputation systems that carry across multiple platforms.

Challenges and Future Directions

Scalability and Transaction Costs

DeFi platforms face infrastructure challenges when scaling to accommodate massive UGC volumes. Network congestion and high gas fees can disproportionately affect smaller creators, potentially distorting participation economics. Layer-2 solutions and alternative consensus mechanisms offer promising avenues for maintaining accessibility while supporting growth.
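
The disproportionate effect on smaller creators follows from simple arithmetic: a fixed per-transaction fee consumes a larger fraction of a small reward than of a large one. The sketch below uses made-up numbers in an unspecified token unit. The function names are hypothetical.

```python
def fee_share(reward: float, gas_fee: float) -> float:
    """Fraction of a reward consumed by a fixed fee for claiming it."""
    if reward <= 0:
        raise ValueError("reward must be positive")
    return gas_fee / reward


def net_reward(reward: float, gas_fee: float) -> float:
    """Reward left after paying the fee, floored at zero."""
    return max(reward - gas_fee, 0.0)


if __name__ == "__main__":
    gas_fee = 2.0  # illustrative fixed fee, same unit as the rewards
    for label, reward in [("small creator", 5.0), ("large creator", 500.0)]:
        share = fee_share(reward, gas_fee)
        print(f"{label}: fee share {share:.1%}, net {net_reward(reward, gas_fee)}")
```

With these numbers the same fee takes 40% of the small reward but only 0.4% of the large one. Lower per-transaction costs, as with the layer-2 approaches mentioned above, narrow that gap.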

Regulation and Legal Frameworks

The decentralized nature of these platforms complicates compliance with evolving digital content regulations. Developing frameworks that balance creator rights, user protection, and platform autonomy requires ongoing dialogue between technologists and policymakers.

Security and Privacy Considerations

Robust security protocols remain essential for protecting both platform integrity and user data. Regular smart contract audits, decentralized storage solutions, and transparent data handling policies help build necessary trust in these ecosystems.

Content Moderation and Governance

Decentralized content management systems must balance community standards with censorship resistance. Innovative governance models that distribute moderation authority while preventing abuse represent an active area of development.

User Experience Optimization

Simplifying complex blockchain interactions through intuitive interfaces lowers barriers to participation. Comprehensive educational resources and streamlined content management tools can help creators focus on quality rather than technical hurdles.

Interoperability Standards

Developing common protocols for UGC portability between platforms can enhance creator flexibility and audience reach. Cross-chain compatibility solutions may emerge as critical infrastructure for decentralized content ecosystems.
