The Impact of AI on Music Royalties Collection

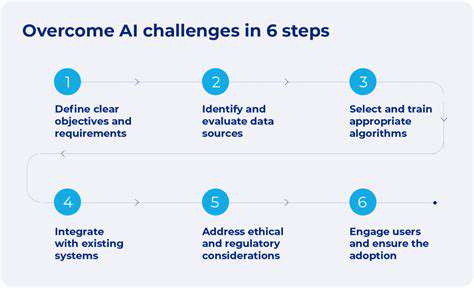

Addressing the Challenges of AI-Driven Royalty Collection

Ethical Considerations in AI Development

The rapid advancement of artificial intelligence presents significant ethical dilemmas. AI systems, particularly those used for decision-making in critical areas such as healthcare and criminal justice, must be developed and deployed responsibly to avoid unintended biases and harmful outcomes. Ensuring fairness, transparency, and accountability in AI algorithms is crucial to avoid exacerbating existing societal inequalities or creating new ones. Developers and policymakers need to establish clear ethical guidelines and frameworks to navigate this complex landscape.

One critical concern is the potential for bias in AI algorithms. These algorithms are trained on large datasets, and if those datasets reflect existing societal biases, the resulting system may perpetuate and even amplify them in its decisions. Addressing this requires careful data curation and algorithm design to mitigate bias and promote fairness. Questions of accountability also arise when AI systems make errors or cause harm: determining who is responsible (the developer, the user, or the system itself) remains a complex and unresolved issue.

The Need for Robust Testing and Validation

As AI systems become more complex and more deeply integrated into daily life, rigorous testing and validation become essential to ensure that they perform as expected and do not exhibit unintended behaviors. This involves testing across diverse scenarios and a wide range of inputs to identify weaknesses and vulnerabilities. Thorough validation is needed to establish the reliability and safety of AI systems, especially in critical applications.

Testing AI systems in real-world settings is critical for understanding how they behave under less controlled conditions, because it can reveal issues and limitations that do not emerge during controlled laboratory testing. Ongoing monitoring and evaluation are also necessary to detect emerging problems or deviations from expected performance.

Data Privacy and Security in AI Systems

The large volumes of data used to train and operate AI systems raise significant privacy and security concerns. Protecting user data from unauthorized access and misuse is paramount, and robust security measures must be in place to safeguard sensitive information, since data breaches can have severe consequences for individuals and organizations alike. Strong encryption, access controls, and secure data storage are crucial components of a comprehensive data protection strategy.

Transparency and user control over how AI systems use data are essential for building public trust. Users should have clear, accessible information about how their data is used and the ability to control its collection and processing, which helps address concerns about potential misuse.

The Impact of AI on Employment and the Workforce

The widespread adoption of AI is poised to significantly alter the job market, creating new opportunities while potentially displacing existing roles. Understanding and proactively addressing this shift is crucial for preparing the workforce for the future. Upskilling and reskilling initiatives are essential to equip individuals with the skills needed to thrive in an AI-driven economy, and the new roles and industries that emerge alongside AI will demand different kinds of expertise.

The potential for job displacement through automation raises serious social and economic concerns. Policies that address job losses and support workers through the transition are essential for an equitable shift to an AI-powered economy. Support for retraining and upskilling programs, together with policies that encourage entrepreneurship and innovation, is key to mitigating AI's potential negative effects on employment.

The Future of Music Royalties in the AI Era

The Rise of AI-Powered Music Composition

Artificial intelligence is rapidly transforming the music industry, with AI-powered tools capable of composing original music, arranging existing pieces, and even generating entire albums. This has the potential to democratize music creation, allowing individuals with limited musical training to produce professional-quality tracks. However, this shift also presents significant challenges to traditional royalty structures, as the lines between human and AI authorship blur.

The emergence of AI algorithms capable of composing music that can be difficult to distinguish from human-created works calls for a re-evaluation of existing royalty models. How should credit and compensation be attributed when an algorithm trained on a large dataset of human-created music produces a new piece? These questions will require careful consideration, and potentially new legal frameworks, to ensure fairness and continue to incentivize creativity in the age of artificial intelligence.

Challenges to Traditional Royalty Models

Traditional music royalty structures, which are typically built on human authorship and performance, face significant challenges in the AI era, particularly in attributing royalties when AI is involved in creating or modifying music. Who is the rightful recipient: the AI developer, the user of the AI tool, or the human artist who provided input or refined the AI's output? This uncertainty is a significant hurdle for the industry as it adapts to the new era.

Furthermore, the potential for widespread use of AI-generated music could drastically reduce demand for traditional musicians and performers. This could lead to a significant decline in income for artists and a restructuring of the entire music industry. Understanding the long-term implications of widespread AI adoption is critical for securing a sustainable future for artists and the music industry as a whole.

New Models for AI-Generated Music Royalties

To address the challenges presented by AI in the music industry, new models for distributing royalties are crucial. These models should consider the contributions of both human creators and AI algorithms, potentially incorporating tiered royalty systems or variable compensation based on the degree of human intervention in the creative process. Furthermore, transparent and easily accessible mechanisms for determining and distributing royalties will be essential to fostering trust and preventing disputes.
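
To make the idea of a tiered system concrete, here is a minimal Python sketch. It assumes that the degree of human intervention can be summarized as a single number between 0 and 1, and that whatever is not paid to human creators goes to a single "AI side" pool (for example, the tool provider). Both are simplifying assumptions, and the tier table, `human_rate_bp`, and `split_royalties` are hypothetical names, not an existing standard or API.

```python
# Hypothetical tier table: (minimum human-contribution share, human creators' cut in basis points).
# The thresholds and percentages are illustrative assumptions, not an industry standard.
TIERS_BP = [
    (0.75, 9_000),  # mostly human-authored work
    (0.40, 7_000),  # substantial human refinement of AI output
    (0.10, 5_000),  # light human editing of AI output
    (0.00, 3_000),  # essentially AI-generated
]


def human_rate_bp(human_share: float) -> int:
    """Return the human creators' cut (in basis points) for an estimated share of
    human contribution in [0, 1]. How that share is estimated is out of scope here."""
    if not 0.0 <= human_share <= 1.0:
        raise ValueError("human_share must be between 0 and 1")
    for threshold, rate_bp in TIERS_BP:  # tiers are ordered from highest threshold down
        if human_share >= threshold:
            return rate_bp
    raise AssertionError("unreachable: the last tier has threshold 0")


def split_royalties(gross_cents: int, human_share: float) -> dict[str, int]:
    """Split a gross royalty (integer cents) between human creators and the AI side.
    Integer arithmetic keeps the two parts summing exactly to the gross amount."""
    human_cents = gross_cents * human_rate_bp(human_share) // 10_000
    return {"human_creators": human_cents, "ai_side": gross_cents - human_cents}
```

For example, `split_royalties(10_000, 0.5)` falls into the 40% tier and returns `{"human_creators": 7000, "ai_side": 3000}`. A variable-compensation scheme could replace the tier lookup with a continuous function of `human_share`; the discrete table simply mirrors the tiered approach described above.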

One promising approach is to establish clear guidelines for ownership and attribution when AI is used in music creation, for example by defining specific roles and responsibilities for human composers, AI trainers, and AI users. Such guidelines would provide a framework for resolving future disputes and support a more equitable distribution of revenue from AI-generated music.
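
As a rough illustration of how role-based guidelines might be recorded and enforced, the Python sketch below models a "split sheet" in which each contributor has one of the three roles mentioned above and an agreed share. The basis-point convention, the rule that rounding leftovers go to the first listed contributor, and the names `Role`, `Contributor`, `validate_split`, and `distribute` (as well as "Ana", "ModelCo", and "Ben") are hypothetical assumptions for this example only.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # The three roles mentioned in the text; this enum is a hypothetical illustration.
    HUMAN_COMPOSER = "human_composer"
    AI_TRAINER = "ai_trainer"
    AI_USER = "ai_user"


@dataclass(frozen=True)
class Contributor:
    name: str
    role: Role
    share_bp: int  # agreed share in basis points (10_000 bp = 100%); an assumed convention


def validate_split(contributors: list[Contributor]) -> None:
    """Reject a split sheet with negative shares or shares that do not total 100%."""
    if any(c.share_bp < 0 for c in contributors):
        raise ValueError("shares must be non-negative")
    total = sum(c.share_bp for c in contributors)
    if total != 10_000:
        raise ValueError(f"shares sum to {total} bp, expected 10000")


def distribute(revenue_cents: int, contributors: list[Contributor]) -> dict[str, int]:
    """Pay each contributor their agreed share. Cents lost to integer division go
    to the first listed contributor, so payouts always sum to the revenue."""
    validate_split(contributors)
    payouts: dict[str, int] = {}
    for c in contributors:
        payouts[c.name] = payouts.get(c.name, 0) + revenue_cents * c.share_bp // 10_000
    payouts[contributors[0].name] += revenue_cents - sum(payouts.values())
    return payouts


sheet = [
    Contributor("Ana", Role.HUMAN_COMPOSER, 5_000),
    Contributor("ModelCo", Role.AI_TRAINER, 3_000),
    Contributor("Ben", Role.AI_USER, 2_000),
]
print(distribute(999, sheet))  # {'Ana': 501, 'ModelCo': 299, 'Ben': 199}
```

Validating the sheet before any money moves reflects the dispute-prevention goal: a split that does not add up to exactly 100% is rejected up front rather than discovered after payment.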

The Future of Music Copyright and Ownership

The rise of AI-generated music necessitates a re-evaluation of copyright law and ownership. As AI algorithms increasingly contribute to the creative process, legal frameworks must adapt to define and protect the rights of both human artists and AI developers. Determining the appropriate balance between copyright protection for human creativity and the need to incentivize AI innovation will be a key challenge in the years to come.

The ongoing debate about the authorship of AI-generated works will undoubtedly shape the future of music copyright. This will require interdisciplinary collaboration between legal experts, musicians, AI developers, and policymakers to establish a framework that is both fair and conducive to the continued growth and development of the music industry in the age of AI.
