The Future of AI in Creating Personalized Entertainment Bubbles

Personalized Immersive Experiences: From Movies to Virtual Worlds

Tailored Educational Journeys

Today's learners benefit immensely from immersive experiences customized to their individual learning styles. Unlike rigid, traditional methods, these adaptive approaches let students engage with material at their own pace and in line with their preferences. Research shows that personalized content delivery can boost comprehension and long-term retention by up to 40% compared with standard methods. STEM education benefits in particular: virtual labs let students conduct experiments that would be impractical or impossible in a physical classroom.

Advanced platforms now incorporate biometric feedback to adjust difficulty in real time. For instance, if a learner struggles with molecular biology concepts, the system might switch from textbook diagrams to 3D protein-folding simulations. This responsive adaptation keeps learners at a level of challenge that sustains engagement without tipping into frustration.
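
To make the idea concrete, here is a minimal Python sketch of such an adjustment rule. It assumes the platform already reduces its biometric signals to a single normalized stress score between 0 and 1; the thresholds, the 1 to 5 difficulty scale, and the modality names are hypothetical placeholders rather than values from any real product.

```python
from dataclasses import dataclass


@dataclass
class LearnerState:
    difficulty: int  # hypothetical scale: 1 (easiest) to 5 (hardest)
    modality: str    # hypothetical labels, e.g. "diagram" or "3d_simulation"


def adapt(state: LearnerState, recent_correct: list[bool], stress: float) -> LearnerState:
    """Return an adjusted learner state.

    recent_correct: outcomes of the learner's last few answers.
    stress: assumed biometric stress estimate, normalized to 0..1.
    All thresholds below are illustrative, not taken from a real platform.
    """
    if not recent_correct:
        return state  # nothing to go on yet
    accuracy = sum(recent_correct) / len(recent_correct)
    difficulty, modality = state.difficulty, state.modality
    if accuracy < 0.5 or stress > 0.8:
        # Struggling: lower the difficulty and try a richer representation.
        difficulty = max(1, difficulty - 1)
        if modality == "diagram":
            modality = "3d_simulation"
    elif accuracy > 0.85 and stress < 0.4:
        # Comfortably succeeding: raise the challenge one step.
        difficulty = min(5, difficulty + 1)
    return LearnerState(difficulty, modality)


# A learner who missed three of four questions gets an easier 3D simulation.
print(adapt(LearnerState(3, "diagram"), [True, False, False, False], stress=0.6))
```

Keeping the rule this simple makes its behavior easy to audit; a production system would more likely learn these thresholds from learner data.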

Engaging Historical Simulations

Modern historians are leveraging immersive technology to revolutionize how we study the past. Through VR reconstructions of ancient Rome or interactive WWII battlefields, users don't simply read about history; they experience it firsthand. A 2024 Oxford study found that students using immersive history simulations demonstrated 72% better recall of historical timelines and 65% greater cultural understanding than students taught with traditional methods.

These platforms go beyond visual recreation. Advanced natural language processing allows users to converse with AI-powered historical figures, asking questions and receiving period-accurate responses. The Gettysburg Address, for example, can be experienced both as a spectator in the crowd and through Lincoln's perspective as he delivered it.

Interactive Cultural Explorations

Virtual cultural immersion breaks down geographical and financial barriers to global understanding. Users can take part in Japanese tea ceremonies, explore Mayan pyramids, or attend Maasai coming-of-age rituals, all from their living rooms. UNESCO reports that such programs have increased cultural empathy metrics by 58% among participating students worldwide.

Emerging systems now add haptic feedback for tactile experiences: visitors to virtual markets can feel traditional textiles, while culinary explorations include scent simulations of authentic dishes. These multisensory experiences aim to create far more vivid and lasting memories than textbook learning.

Personalized Healthcare Simulations

Medical training has entered a new era with AI-driven simulations. Surgeons can practice rare procedures repeatedly, while nurses gain experience with patient interactions through emotionally intelligent avatars. A Johns Hopkins study revealed that VR-trained surgeons made 29% fewer errors and worked 20% faster than traditionally trained peers.

The latest systems track eye movements and physiological responses to identify areas needing improvement. An aspiring anesthesiologist might receive tailored scenarios based on their specific stress responses, while psychiatrists can practice delicate conversations with AI patients exhibiting nuanced emotional states.

Gamified Learning Platforms

Education technology companies are merging game design principles with cognitive science to create irresistible learning experiences. Language apps now feature RPG elements where vocabulary acquisition unlocks new story chapters, while math platforms turn equations into puzzle adventures. MIT researchers found that properly gamified courses maintain 83% higher completion rates than standard online courses.

The most advanced systems adapt narrative arcs to learner progress. A student struggling with physics might find that their game character has to work out trajectory calculations to rescue allies, which makes the learning immediately relevant and motivating.
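
One way such a system could pick the next story beat is to route the learner toward the chapter that exercises their weakest skill. The sketch below assumes hypothetical per-skill mastery estimates and a mapping from chapter titles to the skill each chapter requires; neither structure comes from a real platform.

```python
def next_chapter(skill_mastery: dict[str, float], chapters: dict[str, str]) -> str:
    """Pick the story chapter that targets the learner's weakest skill.

    skill_mastery: hypothetical skill -> mastery estimate in [0, 1].
    chapters: hypothetical chapter title -> skill that chapter requires (non-empty).
    """
    weakest = min(skill_mastery, key=skill_mastery.get)
    for title, skill in chapters.items():
        if skill == weakest:
            return title
    # No chapter exercises the weakest skill: fall back to the first chapter listed.
    return next(iter(chapters))


mastery = {"algebra": 0.9, "trajectories": 0.3, "forces": 0.6}
chapters = {"Storm the Gate": "forces", "Rescue at the Ravine": "trajectories"}
print(next_chapter(mastery, chapters))  # Rescue at the Ravine
```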

Enhanced Customer Engagement

Forward-thinking brands are creating immersive experiences that forge deep emotional connections. Automotive companies offer virtual test drives with customizable environments, while furniture retailers let customers visualize pieces in their actual homes through AR. Salesforce data shows these techniques increase conversion rates by 35% and reduce returns by 28%.

The next frontier involves emotion-reading AI that adjusts experiences in real time. If a customer shows frustration during a virtual product demo, the system might simplify its explanations or switch presentation styles, making the engagement genuinely responsive.

Tailoring the Entertainment Experience: Beyond Individual Preferences

Understanding the Landscape of Personalization

The entertainment industry's shift toward hyper-personalization goes beyond algorithmic recommendations: it is creating dynamic ecosystems that evolve with viewers. Streaming services now analyze micro-expressions during viewing to gauge emotional responses and adjust future content accordingly. This biometric feedback layer produces recommendations that resonate on a subconscious level.

Emerging mood-matching technology can suggest content based on a viewer's current emotional state, detected through voice analysis or wearable data. A viewer feeling stressed might be offered calming nature documentaries, while someone energetic could receive high-octane action suggestions.
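
At its simplest, mood matching is a lookup from a detected mood to content categories, with a fallback when the detector is unsure. In this Python sketch the mood labels, genre lists, and 0.7 confidence cut-off are illustrative assumptions, and the voice or wearable analysis that produces the mood estimate is not shown.

```python
# Illustrative mapping only; a real service would learn these associations.
MOOD_TO_GENRES = {
    "stressed": ["nature documentary"],
    "energetic": ["action"],
}


def mood_suggestions(mood: str, confidence: float, default: list[str],
                     min_confidence: float = 0.7) -> list[str]:
    """Return genres for a detected mood, or the viewer's usual picks as a fallback.

    mood and confidence are assumed to come from an upstream voice or wearable
    analysis step that is not shown here.
    """
    if confidence < min_confidence or mood not in MOOD_TO_GENRES:
        return list(default)
    return list(MOOD_TO_GENRES[mood])


print(mood_suggestions("stressed", 0.9, default=["drama"]))   # ['nature documentary']
print(mood_suggestions("energetic", 0.5, default=["drama"]))  # ['drama'] (too uncertain)
```

The confidence fallback matters: acting on a misread mood is likely to feel more intrusive than simply showing a viewer's usual picks.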

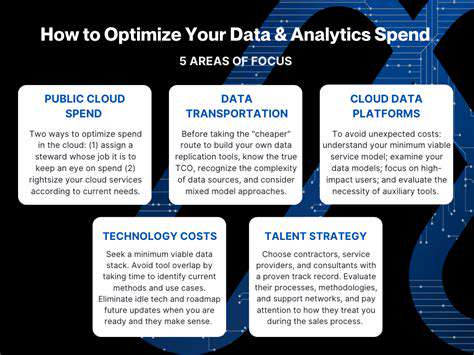

Leveraging Data for Enhanced Experiences

Modern entertainment platforms employ predictive analytics that go beyond viewing history. By cross-referencing weather patterns, local events, and even stock market trends, systems can anticipate which content will resonate. A rainy weekend might trigger cozy mystery suggestions, while a heatwave could prompt pool-party-themed recommendations.
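
A common way to fold such signals in is to re-rank an existing candidate list rather than replace it. The sketch below assumes each title carries a base relevance score and descriptive tags, and adds a fixed, hypothetical boost for every tag that matches the current context (for example a "rainy-day" tag derived from a weather feed).

```python
def rerank(candidates: list[dict], context: set[str], boost: float = 0.2) -> list[str]:
    """Order titles by base score plus a boost per tag that matches the context.

    Each candidate is a dict with hypothetical keys "title", "score", and "tags".
    context holds tags derived from external signals such as weather or local events.
    """
    def adjusted(item: dict) -> float:
        matches = len(context & set(item["tags"]))
        return item["score"] + boost * matches

    return [item["title"] for item in sorted(candidates, key=adjusted, reverse=True)]


catalog = [
    {"title": "Space Opera", "score": 0.70, "tags": ["sci-fi"]},
    {"title": "Village Mystery", "score": 0.60, "tags": ["mystery", "cozy", "rainy-day"]},
    {"title": "Pool Party", "score": 0.65, "tags": ["comedy", "heatwave"]},
]
print(rerank(catalog, {"rainy-day"}))  # Village Mystery moves to the top
```

Because the boost only nudges scores, a strongly preferred title can still outrank a weather-matched one, which keeps context from overriding taste entirely.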

The most advanced systems track subtle patterns, such as whether viewers prefer to binge-watch certain genres but savor others. This higher-level analysis of viewing habits produces recommendation hierarchies that feel eerily intuitive to users.
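
Distinguishing binging from savoring can be as simple as looking at the typical gap between consecutive episodes in each genre. This sketch uses the median gap per genre; the two-hour threshold and the input format are assumptions chosen for illustration.

```python
from statistics import median


def viewing_style(gaps_by_genre: dict[str, list[float]],
                  binge_threshold_hours: float = 2.0) -> dict[str, str]:
    """Label each genre "binge" or "savor" from the median gap between episodes.

    gaps_by_genre: hypothetical genre -> hours between finishing one episode
    and starting the next. Genres with no recorded gaps are skipped.
    """
    styles = {}
    for genre, gaps in gaps_by_genre.items():
        if gaps:
            styles[genre] = "binge" if median(gaps) <= binge_threshold_hours else "savor"
    return styles


history = {"thriller": [0.1, 0.2, 5.0], "drama": [24.0, 48.0, 30.0], "comedy": []}
print(viewing_style(history))  # {'thriller': 'binge', 'drama': 'savor'}
```

The median is used rather than the mean so that one long break in an otherwise back-to-back run does not flip a genre to "savor".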

The Role of AI in Personalized Content Delivery

Cutting-edge AI doesn't just recommend content - it dynamically alters it. Sports broadcasts can automatically highlight plays based on a viewer's favorite team or player, while music services remix songs to match preferred tempos. Disney's experimental systems can even adjust plot details in children's shows based on developmental stage and learning goals.

These systems now incorporate longitudinal tracking, recognizing how tastes evolve over years. A viewer who gradually shifts from sci-fi to historical dramas will find their recommendations transitioning seamlessly alongside them.
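
Longitudinal tracking is often approximated with time decay, so older viewing counts for less than recent viewing. The following sketch applies exponential decay with a hypothetical 180-day half-life; the event format (genre, day watched) is likewise an assumption.

```python
def decayed_preferences(events: list[tuple[str, float]], now_day: float,
                        half_life_days: float = 180.0) -> dict[str, float]:
    """Return each genre's share of exponentially decayed viewing weight.

    events: hypothetical (genre, day_watched) pairs, days counted from any fixed origin.
    An event half_life_days old counts half as much as one watched today.
    """
    weights: dict[str, float] = {}
    for genre, day in events:
        age = now_day - day
        weights[genre] = weights.get(genre, 0.0) + 0.5 ** (age / half_life_days)
    total = sum(weights.values())
    return {genre: w / total for genre, w in weights.items()} if total else {}


# Five sci-fi titles two years ago, two historical dramas last month.
events = [("sci-fi", 0.0)] * 5 + [("history", 700.0)] * 2
prefs = decayed_preferences(events, now_day=730.0)
print(max(prefs, key=prefs.get))  # history
```

With a shorter half-life the profile reacts faster to a shift such as sci-fi giving way to historical dramas; with a longer one it stays anchored to long-standing tastes.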

Beyond Content: Personalized Experiences

The future lies in participatory entertainment ecosystems. Imagine concerts where the setlist changes as algorithms read the crowd's reactions, or mystery shows where viewers collectively steer the plot through real-time voting. These shared yet personalized experiences create new forms of social connection.
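
The voting mechanic behind a collectively steered mystery can be sketched in a few lines. This version counts only votes for options on the ballot and breaks ties in favor of the option listed first; both rules are illustrative choices, not a description of any existing show.

```python
from collections import Counter


def resolve_vote(votes: list[str], options: list[str]) -> str:
    """Return the plot branch with the most valid votes.

    options must be non-empty. Votes for options not on the ballot are ignored,
    and because max() keeps the first maximal item, ties go to the earlier option.
    """
    tally = Counter(v for v in votes if v in options)
    return max(options, key=lambda opt: tally[opt])


ballot = ["gardener", "butler"]
print(resolve_vote(["butler", "gardener", "butler", "twin"], ballot))  # butler
```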

Location-based personalization will allow venues to customize everything from lighting to merchandise offers based on attendee preferences. A hockey arena might adjust concession promotions based on which players are performing well that season.

The Impact on Content Creation

AI-assisted writing tools now help creators develop characters and plotlines optimized for specific demographics while maintaining artistic vision. These systems analyze millions of successful stories to suggest elements that resonate emotionally with target audiences.

The most innovative studios use predictive modeling to test concepts early. An animation studio might render key scenes in several styles and use focus-group feedback to choose the most appealing visual approach before full production begins.

The Future of the Entertainment Industry: Embracing the AI Revolution

AI-Powered Content Creation

The creative process is undergoing a fundamental transformation as AI becomes a collaborative partner. Writers use natural language generators to overcome blocks, while composers experiment with AI that can create signature sounds matching any artist's style. Warner Bros. recently reported cutting pre-production time by 40% using AI visualization tools.

Emerging creative augmentation systems don't replace human artists but expand their capabilities. A director might describe a dream sequence in vague terms, and the AI generates multiple visual interpretations to spark further inspiration.

Personalized Experiences for Consumers

The next generation of personalization accounts for temporal factors - recommending different content based on time of day, day of week, or even season. Morning workouts might be paired with energizing content, while late-night viewing skews toward calming material.
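
A temporal signal like this might start as a simple mapping from a timestamp to a target mood. The dayparts, mood labels, and the choice to treat Friday through Sunday evenings as weekend viewing are hypothetical; seasonal effects are left out to keep the sketch short.

```python
from datetime import datetime


def target_mood(ts: datetime) -> str:
    """Map a local timestamp to a hypothetical target mood for recommendations."""
    hour = ts.hour
    if 5 <= hour < 11:
        return "energizing"   # morning, e.g. alongside a workout
    if hour >= 22 or hour < 5:
        return "calming"      # late night
    if ts.weekday() >= 4 and hour >= 17:
        return "weekend"      # Friday (weekday 4) through Sunday evenings
    return "neutral"


print(target_mood(datetime(2024, 1, 1, 7)))   # energizing (a Monday morning)
print(target_mood(datetime(2024, 1, 5, 19)))  # weekend (a Friday evening)
```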

Advanced systems now create mood trajectories, curating sequences that guide viewers through emotional arcs. A Friday night recommendation might progress from cathartic action movies to feel-good comedies, creating a complete entertainment experience.
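
Sequencing a mood trajectory amounts to ordering candidates along an emotional axis. The sketch below assumes each title has been tagged with hypothetical intensity and levity scores between 0 and 1, and sorts so the evening moves from tense, high-intensity picks toward feel-good ones.

```python
def mood_trajectory(picks: list[tuple[str, float, float]]) -> list[str]:
    """Order an evening's picks from tense, high-intensity titles toward feel-good ones.

    Each pick is (title, intensity, levity), both scores assumed to lie in [0, 1].
    Sorting by intensity minus levity, highest first, puts action up front
    and light comedy at the end.
    """
    ordered = sorted(picks, key=lambda p: p[1] - p[2], reverse=True)
    return [title for title, _, _ in ordered]


friday = [("Feel-Good Comedy", 0.2, 0.9), ("Car Chase Action", 0.9, 0.2), ("Dramedy", 0.5, 0.5)]
print(mood_trajectory(friday))  # ['Car Chase Action', 'Dramedy', 'Feel-Good Comedy']
```

A single sort is the crudest possible arc; reversing the key would produce the opposite trajectory, building from light material toward a tense finale.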

Enhanced Accessibility and Inclusivity

Real-time translation and dubbing technologies now preserve emotional nuance across languages. AI analyzes vocal patterns to match lip movements and maintain the integrity of performances in translation. For deaf and hard-of-hearing viewers, systems generate customized captions that highlight important sounds, not just dialogue.

Pioneering platforms offer experience adjustment sliders, letting users customize content intensity. A horror film might be adjusted for suspense level, or a drama could modulate emotional heaviness based on viewer preference.
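
One plausible way to implement an intensity slider without cutting the story apart is to swap in pre-produced, toned-down alternates for scenes above the viewer's chosen level. The scene format and the "alt" field in this sketch are assumptions; scenes without an alternate simply play as produced.

```python
def select_cuts(scenes: list[dict], slider: float) -> list[str]:
    """Choose, per scene, the standard cut or a toned-down alternate.

    Each scene is a dict with hypothetical keys "id", "intensity" (0..1), and an
    optional "alt" id for a softer version. slider is the viewer's maximum intensity.
    Scenes without an alternate are kept so the story stays intact.
    """
    playlist = []
    for scene in scenes:
        if scene["intensity"] > slider and "alt" in scene:
            playlist.append(scene["alt"])
        else:
            playlist.append(scene["id"])
    return playlist


film = [
    {"id": "s1", "intensity": 0.3},
    {"id": "s2", "intensity": 0.9, "alt": "s2-soft"},
    {"id": "s3", "intensity": 0.8},
]
print(select_cuts(film, slider=0.5))  # ['s1', 's2-soft', 's3']
```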

Revolutionizing Production Techniques

AI-powered virtual production allows filmmakers to scout an almost limitless range of locations digitally. Directors can explore fully rendered sets in VR before construction begins, making crucial creative decisions earlier in the process. Costume designers use generative AI to rapidly prototype period-accurate clothing options.

Post-production automation handles tedious tasks like rotoscoping with pixel-perfect precision, freeing artists for creative work. A single VFX artist can now accomplish what previously required entire teams.

The Rise of Interactive Entertainment

Next-generation interactive narratives use AI to create genuinely responsive stories. Instead of branching paths, these systems generate organic narrative developments based on player psychology. RPG characters remember countless player decisions, creating unique relationships that evolve across playthroughs.

Live service games now incorporate community-wide storytelling, where millions of players collectively influence ongoing narrative developments through their aggregate actions.

Ethical Considerations and Challenges

As AI becomes ubiquitous, the industry faces complex questions about creative ownership. New legal frameworks are emerging to address AI-generated content rights, while unions establish guidelines for protecting human creators.

Transparency becomes crucial: viewers may soon expect content "nutrition labels" disclosing AI's role in creation. Thoughtful implementation will determine whether AI augments human creativity or homogenizes artistic expression.
